Nvidia GeForce Titan review

Monstrous.

Last year's GeForce GTX 680 rewrote the rule book on the levels of gaming performance we should expect from a high-end single-chip graphics card, seeing off AMD's competitors in terms of power while delivering a remarkable level of quietness and efficiency. Less than 12 months on, the firm has topped its own considerable achievement with the release of the GeForce Titan - a consumer-level edition of its £2,800 Tesla K20 "supercomputer" board. The good news is that the gaming version of this phenomenal technology retails for a fraction of the cost with no appreciable cutbacks in its overall capabilities, but the bad news is that it's still twice the price of the GTX 680. The question is, can the case be made for an £800 graphics card? Nvidia reckons so, its marketing positioning Titan as a luxury product that sits atop its current range: ultimate performance at a stratospheric price tag.

Initially, the "Kepler" architecture rolled out in 1536 and 384 CUDA core configurations, aimed at the high-end and mobile/entry-level markets, with mid-range offerings gradually filling in the gap between the two. However, it turns out that the GK104 chip found in the GTX 680 was originally planned to occupy the mid-range space, with another, larger piece of silicon initially slated for the top-end consumer product. When it became apparent that GK104 outpaced AMD's best offerings by quite a margin, it was repositioned as the high-end release, with the original design for the GTX 680 repurposed for the Tesla "supercomputer" line.

Now that larger, more powerful chip - dubbed GK110 - has finally been released into the consumer space, and it's no exaggeration to suggest that in performance terms it's a bit of a monster. Where the GTX 680 features 1536 CUDA cores, the Titan boasts a colossal 2688 - a 75 per cent increase. Onboard GDDR5 RAM gets a threefold boost from the reference design GTX 680's 2GB up to 6GB, while bandwidth is expanded with the move from a 256-bit bus to a meatier 384-bit interface. Transistor count more than doubles from 3.5 billion to 7.1 billion, while ROPs are boosted from 32 to 48, enhancing the card's capabilities in servicing ultra-high resolutions.
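To put the wider memory interface into perspective, here is a minimal Python sketch of the usual peak-bandwidth sum: bus width in bytes multiplied by the per-pin data rate. The bus widths come from the specs above, but the 6Gbps effective GDDR5 data rate is an assumption applied to both cards rather than a figure quoted in this review, and the function name is purely illustrative.

```python
def peak_bandwidth_gb_s(bus_width_bits, data_rate_gbps):
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits: width of the memory interface in bits.
    data_rate_gbps: effective per-pin data rate in Gbit/s (an assumption here).
    """
    return bus_width_bits / 8 * data_rate_gbps


# Assumed effective GDDR5 data rate for both cards (not quoted in the review).
ASSUMED_DATA_RATE_GBPS = 6.0

gtx_680 = peak_bandwidth_gb_s(256, ASSUMED_DATA_RATE_GBPS)
titan = peak_bandwidth_gb_s(384, ASSUMED_DATA_RATE_GBPS)
increase_pct = (titan / gtx_680 - 1) * 100

print(f"GTX 680: {gtx_680:.0f} GB/s")
print(f"Titan:   {titan:.0f} GB/s")
print(f"Increase: {increase_pct:.0f} per cent")
```

Whatever data rate you plug in, identical memory speeds on both cards mean the 384-bit bus works out at 50 per cent more peak bandwidth than the 256-bit one.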

"Titan's GK110 processor features 75 per cent more CUDA processors than the old GTX 680 flagship, twice as many transistors and is backed by a threefold increase in ultra-fast GDDR5 RAM."

The only area where the new card gives way to the previous single-chip flagship is in terms of clock speed. Core clock runs at 837MHz in the Titan, down from 1006MHz in the GTX 680. Similarly, boost speeds (the auto-overclocker that increases performance until thermal limits are reached) drop too - reduced from a maximum of 1058MHz down to a more realistic 876MHz. The bottom line is that the larger chips become, the more heat they produce - paring back clock speeds helps keep the system stable.

Benchmarking the beast

Altogether, this impressive barrage of specs suggests that the Titan should offer a boost of anywhere between 30 and 50 per cent over the GTX 680 depending on how the hardware is stressed, so let's break out some synthetic and gaming benchmarks to see just how much the design improvements in GK110 translate into actual performance.
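As a rough sanity check on that expectation, the Python sketch below multiplies CUDA core count by clock speed for each card and compares the two. This is a crude back-of-the-envelope model, not Nvidia's own method: it assumes shader throughput scales linearly with cores and clock, and it ignores memory bandwidth, ROPs and everything else. The function name is hypothetical; the core counts and clocks are the ones quoted above.

```python
def relative_throughput(cores_old, clock_old_mhz, cores_new, clock_new_mhz):
    """Ratio of (cores x clock) between two GPUs - a crude proxy for shader throughput."""
    return (cores_new * clock_new_mhz) / (cores_old * clock_old_mhz)


# Specs quoted in the review: GTX 680 (1536 cores) vs Titan (2688 cores).
base_ratio = relative_throughput(1536, 1006, 2688, 837)    # base clocks
boost_ratio = relative_throughput(1536, 1058, 2688, 876)   # boost clocks

base_gain_pct = (base_ratio - 1) * 100
boost_gain_pct = (boost_ratio - 1) * 100

print(f"Gain at base clocks:  {base_gain_pct:.1f} per cent")
print(f"Gain at boost clocks: {boost_gain_pct:.1f} per cent")
```

Under this simple model the lower clocks claw back some of the 75 per cent core advantage, leaving a theoretical gain in the mid-40s, which sits towards the top of the 30 to 50 per cent range.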

Our test rig needs to be powerful enough to get the most out of the GPU while at the same time reflecting the kind of high-end hardware likely to be utilised by someone seriously considering an £800 graphics card - so we used a six-core i7 3970X processor overclocked to a phenomenal 4.8GHz, working in concert with 32GB of 1600MHz DDR3 RAM.

We begin with a couple of runs on 3DMark 11 and the new numberless 3DMark tool, utilising its Fire Strike benchmark designed specifically to test higher-end hardware to its limits.

"The 3DMark test results produce the 50 per cent increase in raw power over the GTX 680 we expected from the specs."

| | GTX 680 | Titan | Perf Boost |
|---|---|---|---|
| 3DMark 11 Graphics Score | 3059 | 4543 | 48.5 per cent |
| 3DMark Fire Strike Graphics Score | 3133 | 4586 | 46.3 per cent |

3DMark 11's extreme tests yielded impressive results - a clear 48.5 per cent boost in performance for the Titan, while the demanding Fire Strike test almost matched that at 46.3 per cent. That's pretty much exactly where we would have hoped to see Titan stack up bearing in mind the technological composition of the silicon. However, we were keen to see whether those numbers continued into actual game engines, so we collated a range of software featuring built-in benchmarking tools.
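For anyone wanting to reproduce the "Perf Boost" column, a minimal Python sketch of the calculation follows. The scores are the ones in the table; the function and variable names are our own illustrative choices.

```python
def perf_boost_pct(baseline, result):
    """Percentage improvement of `result` over `baseline`."""
    return (result - baseline) / baseline * 100.0


# Graphics scores from the table: (GTX 680, Titan).
SCORES = {
    "3DMark 11": (3059, 4543),
    "3DMark Fire Strike": (3133, 4586),
}

boosts = {name: perf_boost_pct(old, new) for name, (old, new) in SCORES.items()}

for name, boost in boosts.items():
    print(f"{name}: {boost:.2f} per cent")
```

The Fire Strike figure comes out a shade under 46.4 per cent, which suggests the table's percentages are truncated to one decimal place rather than rounded.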

To begin with, we called upon the fearsome Metro 2033, with its GPU-rending Frontline tool. We ramped up every setting to the max and just to make things even more problematic for our hardware, we ticked the PhysX option too.

Across our gaming benchmarks we also tested a range of resolutions - the GTX 680 makes a good fist of running most games at max settings at 1080p60, so we introduced 2560x1440 into the mix (an increasingly popular configuration for high-end gamers) and added 4K to the line-up, since it is being positioned as the display standard of the future.
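To give a sense of how much extra work each step up in resolution represents, this short Python sketch compares raw pixel counts against 1080p. Pixel count is only a rough proxy for GPU load (it says nothing about geometry, physics or memory pressure), and the names used are illustrative.

```python
# The three test resolutions used in this review.
RESOLUTIONS = {
    "1920x1080": (1920, 1080),
    "2560x1440": (2560, 1440),
    "3840x2160": (3840, 2160),
}


def pixel_count(width, height):
    """Number of pixels rendered per frame at a given resolution."""
    return width * height


baseline = pixel_count(*RESOLUTIONS["1920x1080"])

# Pixel load of each resolution relative to 1080p.
relative_load = {
    name: pixel_count(w, h) / baseline for name, (w, h) in RESOLUTIONS.items()
}

for name, load in relative_load.items():
    print(f"{name}: {load:.2f}x the pixels of 1080p")
```

2560x1440 pushes roughly 1.78 times as many pixels as 1080p, while 4K pushes exactly four times as many.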

"The Metro 2033 bench makes mincemeat of virtually any GPU, but the Titan hands in some impressive results."

| | GTX 680 | Titan | Perf Boost |
|---|---|---|---|
| 1920x1080 (PhysX On/Off) | 29FPS/30FPS | 44FPS/48FPS | 51.7/60 per cent |
| 2560x1440 (PhysX On/Off) | 19FPS/20FPS | 29FPS/31FPS | 52.6/55 per cent |
| 3840x2160 (PhysX On/Off) | Fail/Fail | 13FPS/14FPS | NA/NA |

In our GTX 680 review, the Metro 2033 Frontline benchmark witnessed only minimal improvements between the new hardware and its GTX 580 predecessor. However, with the Titan and the Core i7 3970X working in concert, it's an entirely different story. Across both 1920x1080 and 2560x1440, we see gains in the 50 per cent range - a match for the synthetic benchmarks. When it comes to 4K, performance grinds to a complete halt on the GTX 680. We have the feeling that we were simply over-taxing the capabilities of the hardware at this extreme resolution. 13FPS for the Titan may not sound exemplary, but it's actually pretty astonishing stuff bearing in mind just how punishing this test is.

Next up, two more demanding examinations of the Titan's credentials: we completely maxed out Batman: Arkham City's rendering arsenal, invoking maximum PhysX simulation (for which an entire second GPU is recommended) and 32x CSAA for a meaty workout for the memory interfaces of our test cards. We ran the performance-sapping DirectX 11 renderer with all effects enabled and full tessellation engaged - a set-up guaranteed to give even the most powerful GPU a thorough work-out.

We followed that up with similar tests on the newly patched, Nvidia-friendly Tomb Raider, running at our three target resolutions with the TressFX hair-rendering simulation both engaged and disengaged. Settings were at the default Ultra (TressFX off) and Ultimate (TressFX on), which maxes out everything with the exception of anti-aliasing, where FXAA is the default. Super-sampling options were available, but we decided to stay with the post-process technique here in the interests of giving our hardware a fighting chance.

"Results are all over the place with the Arkham City tests - the 80 per cent boost at 1080p a particular mystery."

| | GTX 680 | Titan | Perf Boost |
|---|---|---|---|
| 1920x1080 (PhysX On/Off) | 57FPS/65FPS | 75FPS/117FPS | 31.5/80 per cent |
| 2560x1440 (PhysX On/Off) | 42FPS/49FPS | 56FPS/76FPS | 33.3/55.1 per cent |
| 3840x2160 (PhysX On/Off) | 13FPS/24FPS | 31FPS/35FPS | 138.4/45.8 per cent |

Some interesting results here, with the Titan registering just a 31 to 33 per cent gain with physics simulation active, the gap rising substantially when PhysX is disabled. We were particularly surprised by the 80 per cent performance boost at 1080p and thought that some level of error may have crept into the results, but double-checking the benchmark confirmed the stats. At 4K, Titan really flexes its muscles, registering a 138 per cent improvement with PhysX on, though this narrows down to a less surprising figure when it is disabled - once again, the combination of physics simulation and extreme resolution brings the GTX 680 to a juddering halt (the game works fine at 4K on more modest settings though, as our recent testing confirms).

More consistent results are seen when the Titan gets to grips with the new Tomb Raider reboot - a beautiful game on PC. The 50 per cent boost we expect returns though we note that TressFX continues to be a major performance hog no matter what resolution we choose. The more realistic, Compute-heavy hair-rendering didn't seem to work properly at 4K res though, with some colour switching afflicting Lara's locks. The development team suggests it may be patched at a driver level by Nvidia but did point out that we're probably the only people playing the game at this mammoth resolution right now. Um, fair point. This may explain why the Titan's 4K results were so much better than GTX 680's - we're really in uncharted territory here with no official support. Alternatively it may simply be the case that the extra memory and bandwidth Titan possesses can handle the extra load where the older card lacks the substantial resources required - as you've probably noticed, poor results and even test fails afflicted the 680 in a number of our tests.

That said, it's worth pointing out that in the past we've achieved some pretty good results with the GTX 680 at 4K - we just had to be realistic with the quality settings, an approach we're definitely not following here in these tests where we're pushing the benchmarking options to their limits. But the results speak for themselves: all strongly suggest that we could hit the current 30Hz refresh of the 4K standard with the Titan and employ much more in the way of lavish effects work.

"Tomb Raider sees a return to the 50 per cent boost we expect from Titan, whether TressFX is enabled or not."

| | GTX 680 | Titan | Perf Boost |
|---|---|---|---|
| 1920x1080 (TressFX On/Off) | 41FPS/61FPS | 60FPS/91FPS | 46.3/49.1 per cent |
| 2560x1440 (TressFX On/Off) | 25FPS/36FPS | 39FPS/55FPS | 56/52.7 per cent |
| 3840x2160 (TressFX On/Off) | Fail/17FPS | 19FPS/26FPS | NA/52.9 per cent |

Gameplay performance analysis

You can never have enough rendering power at your disposal - especially with next-gen consoles around the corner - but the question is, to what extent is it actually useful in the here and now outside of more niche set-ups like multi-monitor "surround" gaming? Does that 50 per cent of extra grunt over the standard GTX 680 actually translate into an improved gameplay experience on the more mainstream single-display set-up? To illustrate the pros and cons of the argument, we put together video analyses based on a brace of the most technologically demanding PC games currently on the market.

We'll kick off with DICE's Battlefield 3. The GTX 680 runs this game at 1080p60 on the overkill ultra setting with just minor frame-rate drops, suggesting plenty of headroom for Titan to push the game onto even higher levels. If ultra-low latency gaming is your bag, it's possible to dial back settings a touch and enjoy a full 120Hz experience, which should also mean good things for stereoscopic 3D gaming. For our testing we'll stick at 60FPS and ramp up to the next level of resolution: 2560x1440. We'll be talking in more depth about these "2.5K" displays in the near future, but suffice to say that prices are dropping rapidly on these screens and they offer a tangible improvement over 1080p for both games and desktop applications. If you're thinking of upgrading your PC gaming display, we highly recommend something along the lines of the Dell U2713HM or the much cheaper Korean imports you can source from eBay.

Here, we're running BF3 through the demanding Operation Swordbreaker stage, with settings at ultra and v-sync engaged. The results are intriguing - clearly the Titan offers a substantial improvement over the GTX 680 in line with the benchmark results, but the latter stages of the testing see the gap close, with the 60Hz ceiling introduced by v-sync effectively capping the top-end performance of this £800 card - in these stages we actually have a surfeit of processing power available. Crucially though, minimum frame-rates are significantly improved throughout the test, making for a much more consistent, enjoyable experience.

"Want to run Battlefield 3 at 60FPS on ultra settings on your new 2560x1440 display? Titan delivers that raw performance in a single-chip design."

If we're looking for a sterner test of Titan's capabilities, we'll need to go elsewhere. Battlefield 3 is swiftly approaching its second birthday and is ripe for replacement, while on a more general technical level, everything changes this year with the introduction of new console hardware. Virtually all major games are built with scalability in mind, designed to be playable on Xbox 360 and PlayStation 3, but the arrival of their next-gen replacements will inevitably see the technological bar shift upwards - beyond even the scope of Battlefield 3. Indeed, with certain titles such as Far Cry 3 and Crysis 3, we're already seeing the goalposts shifting with even basic rendering demands increasing to the point where older hardware is struggling to cope.

Crysis 3 is Crytek's next generation statement of intent - and, quite simply, the most graphically impressive PC game we've ever played. Side-by-side with the console versions, the computer version is in a completely different league, its highest settings capable of bringing virtually any PC to its knees. Yes, even the Titan in combination with an overclocked six-core Intel CPU struggles to run this game with consistent performance when all the dials are ramped up to 11. To illustrate, in the next video analysis, we're running Crysis 3 across the first two levels of the game at 2560x1440 with the global preset locked at the 'very high' level. We need to be a little careful with anti-aliasing here: Nvidia's TXAA modes incur a huge performance penalty - more so than the higher MSAA settings, surprisingly enough. We settle on the SMAA 2x medium setting, as its impact on fluidity seems minimal and its edge-smoothing coverage is very, very good indeed.

Typically we prefer to run with v-sync engaged in order to maintain image integrity, but on this game, at this level, it's not a good idea: Titan switches between a hard 20 or 30FPS as it locks onto the screen refresh, while the GTX 680 is pegged down still further, killing the gameplay experience. Operating with v-sync off produces some unsightly screen-tear (you'll see from the graphs why we typically prefer to run with it engaged on PC titles) but the game is still spectacularly good-looking, and it's at least playable on both cards.
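The hard 20 or 30FPS lock can be illustrated with a simplified Python model of double-buffered v-sync on a 60Hz display. This is a sketch under stated assumptions rather than a description of the driver's actual behaviour: it assumes a constant render time per frame and no triple-buffering or adaptive v-sync, and the function name and sample frame-rates are hypothetical.

```python
import math


def vsync_locked_fps(raw_fps, refresh_hz=60):
    """On-screen frame-rate with double-buffered v-sync (simplified model).

    Each finished frame waits for the next screen refresh, so it occupies a
    whole number of refresh intervals: 1 -> 60FPS, 2 -> 30FPS, 3 -> 20FPS
    on a 60Hz display.
    """
    if raw_fps <= 0:
        raise ValueError("raw_fps must be positive")
    refreshes_per_frame = math.ceil(refresh_hz / raw_fps)
    return refresh_hz / refreshes_per_frame


# Hypothetical unlocked frame-rates, chosen purely for illustration.
for raw in (75, 45, 29, 25):
    print(f"{raw}FPS unlocked -> {vsync_locked_fps(raw):.0f}FPS with v-sync")
```

In this model anything rendering between 30 and 60FPS is pulled down to 30FPS and anything between 20 and 30FPS drops to 20FPS, while a card rendering faster than the display refresh is simply capped at 60FPS.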

"Crysis 3 testing demonstrates the power of the hardware but also reveals that the Titan architecture has its limits."

Here we see something approaching the 50 per cent boost in performance we saw in the earlier benchmarks, but crucially it has translated across into actual gameplay on the most technologically demanding PC game ever made. It's the difference between relatively smooth, playable action and a sub-optimal experience that isn't anything like as satisfying. The results also illustrate that despite its almost legendary status among the PC gaming elite, the Titan isn't a magical solution for running any game by default at any resolution on max settings. Crytek promised a PC-melting piece of software and while the lower quality settings work fine on most enthusiast set-ups, the very high level is brutally uncompromising. Even the Titan can't get anywhere near 60FPS at 2560x1440 without some degree of compromise in the quality settings.

The sobering reality is that you'll need to SLI two or more of these beasts to fully overpower games like Crysis 3 and the Witcher 2 when they're operating at their most extreme levels and even then you may run into further limitations. Even the original Crysis - in all of its unoptimised, power-sapping glory - drops frames at 2560x1440, and anything approaching 60FPS is only possible at all if anti-aliasing is disabled (though it runs like a champ at 1080p). Titan is indisputably the most powerful single-chip GPU on the market, but it's important to point out that even this rendering colossus has its limits.

Nvidia GeForce Titan: the Digital Foundry verdict

In many ways, the numbers don't add up for Titan. Even though it is by far and away the most powerful single-chip graphics processor on the market, it commands a mammoth price premium that only really makes sense for those lucky enough to live a life where money is no object, or where PC gaming is a core focus.

Certainly in terms of the price vs. performance ratio, the sobering reality is that you're paying 2x GTX 680 money for a 50 per cent performance boost. At the same time, Titan occupies the same price-point as the dual-chip GTX 690 (effectively two 680s built into a single product with just minor clock speed compromises) which runs most key games more smoothly with as much as a 20 per cent increase in frame-rate, depending on the game. The single-chip solution is significantly cooler and more power-efficient of course (it's also remarkably quiet) but the chances are that if you can splash out £800 on a graphics card, you can also afford a meaty power supply and a sound-insulated case. If we had the time and resources available, we'd have liked to have compared the Titan's capabilities against two GTX 670s running in SLI - we have a hunch that gameplay metrics would be quite similar and you'd save £250 into the bargain.
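That value argument can be sketched as a quick performance-per-pound comparison in Python. The inputs are approximations drawn from the figures above, not measured data: the GTX 680 price of £400 is inferred from Titan costing twice as much, Titan's performance is taken as a flat 1.5x, and the GTX 690 is given its best-case 20 per cent advantage over Titan. All names are illustrative.

```python
# Approximate inputs based on the review's own figures (see assumptions above).
CARDS = {
    "GTX 680": {"price_gbp": 400, "relative_perf": 1.0},        # price inferred
    "Titan": {"price_gbp": 800, "relative_perf": 1.5},
    "GTX 690": {"price_gbp": 800, "relative_perf": 1.5 * 1.2},  # best case
}


def perf_per_pound(card):
    """Relative performance delivered per pound spent."""
    return card["relative_perf"] / card["price_gbp"]


baseline = perf_per_pound(CARDS["GTX 680"])

# Value of each card relative to the GTX 680 (1.0 = same performance per pound).
value_vs_680 = {
    name: round(perf_per_pound(card) / baseline, 2) for name, card in CARDS.items()
}

for name, value in value_vs_680.items():
    print(f"{name}: {value:.2f}x the GTX 680's performance per pound")
```

On these rough numbers Titan delivers about three quarters of the GTX 680's performance per pound, while a best-case GTX 690 manages around 90 per cent.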

"A plaything for ultra-hardcore gamers and the fabulously wealthy, Titan's value proposition is on shakier ground for mere mortals. We love the fact that it exists, but twice the cash for 50 per cent more power isn't the best deal."

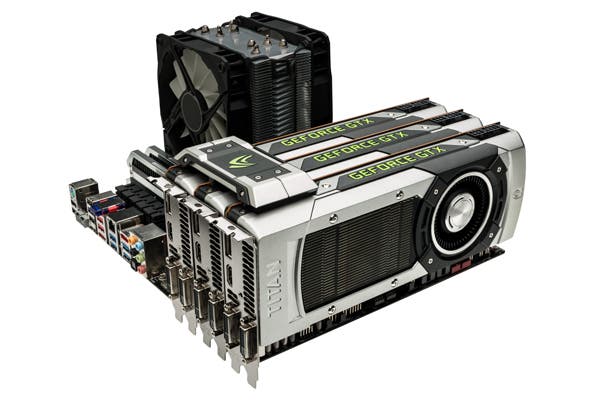

Where the Titan really comes into its own is in Compute performance (look no further if you want to build your own super-computer from consumer parts) and scalability - you can daisy-chain three of these monsters together to create the ultimate, untouchable gaming PC. Now, the basic idea of splashing out £2,400 on three graphics cards alone might seem insane, but it begins to make sense in the world of the ultra-hardcore where Alienware sustains a lucrative business selling gaming laptops for much the same money. Another potential avenue where the Titan could be the preferred choice is in integrating the high-end rendering or Compute tech into a smaller form factor PC - that's an avenue simply not open for SLI or GTX 690-based set-ups which are too large, too hot, and too power-hungry.

But returning to the real world, while Titan prices stay north of £800, it's difficult to recommend this to anyone other than the most affluent, hardcore, dedicated PC gamers around. That said, we have a feeling that this isn't the end of the Titan story and reckon that there's a good chance that more consumer-friendly versions of the tech will appear. The GK110 silicon that powers the Titan is a new design and with production yields being what they are, Nvidia must surely be stockpiling imperfect examples of the chip destined for cheaper cards. This is exactly what happened with the GTX 670, identical from an architectural standpoint to the 680, but with the defective areas of the chip deactivated. We saw how good the GTX 670 was in relation to the 680, so hopes are high that we'll see a similar level of scalability with GK110, perhaps restoring the price vs. performance ratio to something more appealing to the more value-conscious end of the enthusiast community.

In the here and now though, Titan is a unique, remarkable product - an indulgence for the wealthy few for sure, but a serious statement of intent from Nvidia on the future of rendering technology nonetheless. It's difficult to make a case for actually buying it unless money is no object, but the fact that it exists at all is somehow quite wonderful.