John Carmack: Harbinger of Doom

"Games will look like movies," says the Id development legend in the first part of this fascinating account into his keynote speech at this year's GDC...

One of the undoubted highlights of this year's Game Developers Conference was John Carmack's hour-long keynote address at the San Jose Civic Auditorium. Billed as "A candid look at the issues and rewards of bleeding edge engine development," Carmack's talk didn't disappoint, giving a rare insight into the challenges facing Id Software and other bar-raising developers in today's demanding era of increasing realism, along with the associated issues of lengthening development cycles, ever-increasing team sizes and, of course, costs.

Reality Bites

"There's much that still separates the render from reality. There was an old saying many years ago in computer graphics that reality is a million polygons a second. That's clearly not the case because we're today rendering a million polygons a second in many cases and we're still a long way from what you would call photo realistic.

"But there are things that we can actually look at improving here - we're probably a factor of a hundred off from real world in terms of real world player object complexity. A typical crowd scene in a game might count a couple of dozen. [In real life] you're looking at thousands of people, and you look at all the tiny things we're used to abstracting in games.

"A cluttered desk in a game might have a couple sheets of paper, a coffee cup, keyboard and monitor, wherever you look at most people's offices there's going to be a couple of thousand discrete little items there. So there's a clear path that we'll take on increasing the complexity of worlds.

"There will be probably another factor of ten that we'll just crank up in terms of the normal things that we do today in games that we want to go get and bring right up to those levels where everything is at very high resolutions and very high frame rates, very high colour depths. But another factor of 100 or so will probably come in in place of what we do, and we can then do high quality surface rendering, we can do a really cool job and hopefully handle the intractable problems like soft shadowing and motion blur and doing really good reflections throughout the world and necessarily simplified assumptions."

Reality: from four and a half hours to 60 times a second...

"But it's still on the graphics side of things you look at it, and a factor of a million is basically doing at 60Hz what takes four and a half hours to do now. And of course four and a half hours has always been what a rendered frame takes, no matter what computing power you've got.

"It'll be interesting to see if people are able to suck that up in the offline side of things there. But still, it means that people can - quite credibly with no huge leaps of imagination or breakthrough technologies - expect to eventually be rendering Lord Of The Rings rendered quality TV graphics in games in a decade or more from now.

"But there are several issues that we're seeing there in the offline rendering world which we will have to put a little more [thought] into. In Lord Of The Rings, every frame has basically been gone through and tweaked dynamically with an artist's eye - and that's something we're unable to do in a real-time situation, because we have more flexibility than that.

Too much freedom, too little power?

"But the problems that a lot of gaming has related in the offline [rendering] stuff is very much one of being able to give the player the freedom to move around without losing the ability to completely control the experience there. Certainly different types of games will be done quicker than others. When you've got a third-person constrained view you're going to be able to put in most of that level of detail and sophistication and games will look like a movie. A third-person rendered game where you have a director's perspective moving in there. I have very little doubt that that will be solved with the quality level of looking like a movie.

"First-person games where you've got complete freedom are a little bit harder - a lot harder in some ways. The actual fidelity of light to render on there [for the immersive experience] is something that requires a little bit more in the way of advances in I/O devices where, interestingly, resolutions where we're at right now - if we had the proper display technologies - are actually perfectly acceptable to render reality if we had retinal scanning and direct retinal imaging. If we have to use a primitive imager for the entire thing we might need as much as another factor of 15 in terms of pixel rates to go ahead and deal with the complete level of 'look around' in [deep] immersion.

"But some of the things that make it even more difficult are when you start looking at giving the ability to people to look at whatever they want, with whatever camera they want. This then brings up the whole issue of things that are tweaked and massaged in the offline rendering world that need to somehow come across automatically and convincingly. And then that leads up to a couple of the problems which we really want - so much - [to be] nearly done in what we're doing in computer games.

When it's 'done'

"Another one that basically could be done right now is audio. If anyone wanted to spend all the resources that we have today in terms of using the GPUs for and all the floating point processing power, audio could be done. I mean we could go ahead and have all the radiosity style sound transfer sound environments, and very complex environments. You could certainly have the sample rate and bit transfer for all of that. But it just doesn't pay off in the current generation. But in a couple more turns of processor generation audio will just be 'done'.

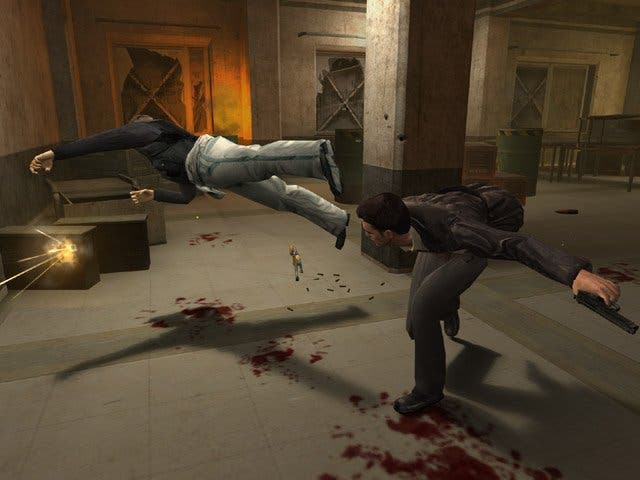

"Now, things that aren't done: Physics simulation. This ties in with that and the tweaking of all the render views, where we're much, much closer to being able to render exactly what we want, than we are to being able to simulate exactly what we want.

"In many cases physics is still one of those things where everyone has a physics engine now and they all sort-of kind-of work, but everybody knows pretty much what you do to go ahead and break most physics engines. We're still doing basically trivial things. Y'know it's cool to have little boxes bounce around and knock off of each other and land in different orientations and rag doll the character, but when we start looking at what people will be experimenting with in current and future game generations - there will be simulations of weather, simulations of liquid, simulations of dust motes going through the air and transferring through the environments, and all of these are interesting, challenging problems that we can get some mileage out of.

Gaming gets academic

"It ties in, kind of interestingly, with something that is happened in the games industry in the last decade, in that a lot of things that were academic pursuits are actually really relevant to what we're doing now. 10 years ago, gaming had nothing whatsoever to do with what people do academically with simulation or graphics, what you were doing was basically all implementation level things. The trickery was in figuring out how to do something clever that makes some implementation thing... y'know, small integral factor faster.

"But now with the power and the fact that not everything is nailed down so hard in terms of exactly what fractions of the CPU need to go to these things and what's left over for other things. We've seen it certainly in the last five years, a huge amount of beneficial crossover from the academic graphics research world into computer graphics, because now what people do for a college thesis or something has direct applicability into what you could use in a computer game.

"So physics rendering is something that is still going through a lot of that - and that's not my speciality - but it's one of those areas that you can look at and say 'this is going to be a very exciting area for research that will be important' as we start more or less polishing off many of the graphics side of things."

Tune in tomorrow for Carmack's thoughts on the challenges of Artificial Intelligence, the frustrations of lengthening development cycles and how remaking Id's back catalogue with the Doom III engine was considered as a solution to them...