Like animals, video game AI is stupidly intelligent

Intelligently stupid.

We tend to think of real and virtual spaces as being worlds apart, so why is it that I can't stop seeing an octopus arm in 2008's spectacular Dead Space 'Drag Tentacle,' the alien appendage of developmental hell? Beyond surface xeno-weirdness, it's what clever animation and the neural marvel have in common that has me interested. Since an octopus arm is infinitely flexible, it faces a unique challenge. How do you move an arm to set x,y,z coordinates and a certain orientation if it has infinite degrees of freedom in which to do it? How might the octopus arm tackle its virtual cousin's task of going to grab the player when they could be anywhere in the room - free even to move as the animation is first playing?

You simplify. The former Dead Space developer and current senior core engineer at Sledgehammer Games, Michael Davies, took me through the likely digital solution. The drag tentacle is rigged with an animation skeleton - bones to twist and contort it so animation/code can bend it into different shapes. A trigger box is placed across the full width of the level Isaac needs to be grabbed from, with a pre-canned animation designed specifically to animate to the centre of it. Finally, to line up the animation to the player, inverse kinematic calculations are done on the last handful of tentacle bones to attach the tentacle pincer bone to the ankle bone of Isaac, while also blending the animation to look natural.
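To make that last step concrete, here is a minimal 2D sketch of the idea: a canned pose supplies the bulk of the motion, and an inverse kinematics pass bends only the last few bones towards the target before the two are blended. It assumes cyclic coordinate descent (CCD) as the solver purely for illustration; nothing in Davies' description says which solver Dead Space used, and `forward_kinematics`, `ccd_tail_ik`, `blend` and their parameters are hypothetical names.

```python
import math

def forward_kinematics(angles, bone_length=1.0):
    """Return the 2D position of every joint, root first, tip last."""
    x = y = heading = 0.0
    points = [(x, y)]
    for angle in angles:
        heading += angle  # each angle is relative to the parent bone
        x += bone_length * math.cos(heading)
        y += bone_length * math.sin(heading)
        points.append((x, y))
    return points

def ccd_tail_ik(angles, target, free_bones=4, iterations=100, bone_length=1.0):
    """Bend only the last `free_bones` joints so the tip moves onto `target`."""
    angles = list(angles)
    first_free = max(0, len(angles) - free_bones)
    for _ in range(iterations):
        # Walk from the tip-most joint back towards the first free joint.
        for i in range(len(angles) - 1, first_free - 1, -1):
            points = forward_kinematics(angles, bone_length)
            joint, tip = points[i], points[-1]
            to_tip = math.atan2(tip[1] - joint[1], tip[0] - joint[0])
            to_target = math.atan2(target[1] - joint[1], target[0] - joint[0])
            delta = to_target - to_tip
            # Wrap the correction into [-pi, pi] so the joint takes the short way round.
            angles[i] += math.atan2(math.sin(delta), math.cos(delta))
    return angles

def blend(canned, solved, weight):
    """Linear blend between the canned pose (weight 0) and the IK pose (weight 1)."""
    return [(1 - weight) * a + weight * b for a, b in zip(canned, solved)]

# Assumed example: an eight-bone tentacle whose canned pose is a straight line.
canned_pose = [0.0] * 8
ankle = (6.5, 1.5)  # hypothetical target position
ik_pose = ccd_tail_ik(canned_pose, ankle)
halfway = blend(canned_pose, ik_pose, 0.5)
```

The first four bones never move from the canned pose, which is the whole point: the expensive, open-ended problem is confined to a handful of joints at the very end.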

The octopus, conversely, constrains any of its flexible arms' infinite degrees of freedom to three. Two degrees (x and y) in the direction of the arm and one degree (the speed) in the predictable unravelling of the arm. Unbelievably, to simplify fetching, the octopus turns an infinite limb into a human-like virtual joint by propagating neural activity concurrently from its 'wrist' (at the object) and central brain and forming an 'elbow' where they meet - i.e. exactly where it needs to be for the action.

So what's the 'exciting' parallel? The octopus arm is doing the natural equivalent of a pre-canned animation - outsourcing the collapse of degrees of freedom to its body so that it doesn't have to rely on a central brain that wouldn't be able to cope. Similarly, the drag tentacle leans on an animated skeleton to collapse degrees of freedom like a human arm, but also pre-canned animation à la octopus, and only directly tracks the player and blends its animation at the last moment - outsourcing to the 'body' of the animation and 'behaviour' of the scripting.

And it's not just these appendage cousins. A virtual world having to be encoded and nature having to encode and navigate the real world are both fundamentally about simplification.

The only Go champion to ever score a win against Google's DeepMind 'AlphaGo' AI recently retired, declaring AI an entity that simply 'can't be defeated'. And yet, according to researchers, even the most powerful neural networks share the intelligence of a honeybee at most. How do you disentangle these statements? I have to bet that if any one contingent of the population is most skeptical of the potential dangers of AI, it's people who play video games. We're hobbyist AI crushers. No article on how humanity was only placed on this Earth to create God's true image in AI would ever convince us otherwise. After all, how can gamers be expected to shake in the presence of these neural network nitwits when we've been veritably cosseted by the virtual equivalent of ants with guns?

Yet pouring water on the prospects of AI now or at any point seems foolhardy. 2011 only just saw the deep learning breakthroughs that have now seen translation and visual/audio recognition advanced to and beyond human capability. Such advancement may manifest day-to-day for the moment as little more than AI-generated auto replies to my girlfriend helpfully offering 'no' or 'nope' in response to whether I'm having a good day, but the application to research is endless. They can rediscover the laws of physics, reveal what Shakespeare did and didn't write, and predict when you're going to die. As a subset of machine learning, deep learning neural networks can be trained on data sets until they reduce their errors enough that they can accurately generalise what they've learnt for novel data. With layers of 'nodes' loosely analogous to our own neurons, these algorithms are powerful, if fundamentally not 'intelligent' tools. They employ an incredible level of pattern matching in place of semantic understanding (although the field isn't without efforts contrariwise). It's controversial for some to call them AI at all.

Yet, in the gaming space we've had the dramatic development of the fight for human supremacy seemingly being lost for good on the battlefield of Go (the more mathematical alternative to chess) in 2015 to DeepMind's reinforcement learning program, AlphaGo, with its technically mindless but 'creative' flourishes. And then the salt was veritably rubbed in when DeepMind's AlphaStar became a StarCraft II grandmaster capable of eviscerating 99.8 per cent of players - as I was writing this feature no less. No AI article will ever be up to date. Again, this isn't necessarily as impressive as the hype it generates. If anything, it's AI's blind proficiency that makes it potentially dangerous. It doesn't have to be conscious or even particularly intelligent to be better than you at discrete tasks or effectively hurt you through weapon systems and social media and search algorithm filter bubbles. As with the atomic breakthroughs, never bet against science's potential to better and/or ruin your life.

I think what bothers me most about AI discussions is some of the absentees. Whilst we're doing our best to expunge this planet of all other company, we aren't quite alone in a room with AI yet. AI is often referred to as if it's our one chance at meeting our equal outside of ourselves and yet evolutionary theory has shown us that the entirety of the animal kingdom is in fact one big family tree. Within animals is everything of what we are. The building blocks of higher cognition are preserved within living exhibits all around us - nothing just materialised within humans suddenly and apropos of nothing. And what of lowly video game AI? Are there no benefits to its approaches?

Defining intelligence is plagued by the inherent bias of it being us doing the defining. As Jerome Pesenti, VP of artificial intelligence at Facebook, says of DeepMind and OpenAI's efforts towards an artificial general intelligence (AGI), it's 'disingenuous' to think of the endpoint of an AGI as being human intelligence because human intelligence 'isn't very general.' We're enamoured with it as a differentiating factor, but by many a measure we can be beaten out by those we dismiss. If intelligence is defined by information processing and how quickly we can process high volumes of information, pigeons rule the roost. Learning speed? Human infants are bested by bees, pigeons, rats and rabbits. How exactly do you make a test ecologically neutral between an infant and a bee? Most often you can't - except perhaps in visual tests.

The overwhelming point, however, is that you can't define humanity's unique traits as intelligent and grind the rest of the animal kingdom into dust. All behaviour that has survived must be to some degree de facto intelligent if they all effectively achieve their objectives like an Alpha algorithm. Just as popular culture's depiction of linear evolution is a falsity (we are all equally evolved on this earth apart from *insert politician's name here*), so is it often true for intelligence. Intelligence is therefore only a rough approximation of the complexity of a natural/virtual agent's objectives that are fulfilled, but evolutionary solutions in behaviour and bodies are also intelligent. Even if we define intelligence on the basis of how much prior information is needed for the acquisition of a new skill, to what extent is it that our bodies and behaviours factor in? We are all incredibly versed in what pushing a human cognitively looks like - do we know fully what that means for most other animals on the planet? Small brains often just have to find alternative means to achieve their objectives; often by leaning instead on the animal's environment or body for a solution. Think of the perfect circle formed by a scorpion or spider's legs. Detecting vibrations spatially is simplified to a matter of which leg the vibrations reach first. No complex computation necessary.

The key to any investigation of intelligence is that the approach is bottom-up as opposed to top-down. This applies to animal studies. Instead of seeking human-level speech or numeracy in dolphins or tool-use in bees and proving next to nothing, we can ground experiments in analysing how dolphins actually communicate or count in their lives. We can work out what a reasonable test of novel skill acquisition looks like for their toolset. We can look to animal cognition and try to find the evolutionary roots of such abilities in an ecologically valid way.

It applies to AI. The development of deep or reinforcement learning algorithms that accept no top-down imposed rules, but autonomously train themselves by means of networks that resemble our own neurons, inherently has great potential insight to lend on how our brains work. The only issue we now see is that the gaps in data that AI combs off of Google or even scientific data are effectively society-wide top-down provisions that invariably bias AI against minorities and women. It's just another way 'reference man' might further plague society. Then we have bioinspired robots that, by dint of being situated in an ecologically valid environment and taking biological inspiration for their bodies, can actually shed light on how and why animal behaviour, and by extension our own, works.

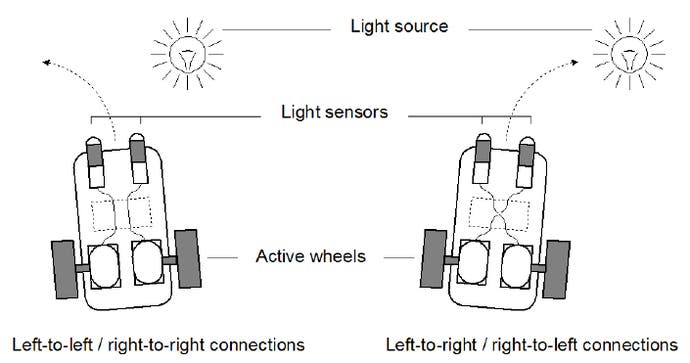

Enter video game AI - a curious thing. By not exercising the muscles of the very latest AI research it's left in a place that is frankly fascinating. Evidently fascinating to a large contingent of gamers too, if excellent resources like the YouTube channel AI and Games are anything to go by. Like the exhibits that buzz around us, developers often leverage much the same strategies evolution has employed to solve intelligence in small-brained animals. However, the term I'll be borrowing for the closest description of video game AI agents was coined by Valentino Braitenberg in his 'Vehicles: Experiments in Synthetic Psychology' way back in 1984. Braitenberg machines are simple thought experiment vehicles, a car for example, with simple reactive sensors, responding perhaps to light, driving the wheels. Given only the barest of increases in connection complexity between the wheels and sensors, a complex environment and several stimuli present, the vehicle will appear, to all intents and purposes, an intelligent, thinking being. Its behaviour is motivated, goal-oriented, dynamic and adaptive to changes. Yet, underneath it all, there is no processing, no cognitive processes in memory or reasoning - nothing. This, at least in part, describes what a small-brained insect running on just innate behaviour is. Given enough additional connections, could it even describe humanity with a consciousness cherry on top? Additionally, Heider and Simmel, with their 1944 experiment wherein subjects were shown an animated tragedy of simple geometric shapes, demonstrated that as social beings our natural inclination is to irrationally project agency, social behaviours and intentions onto things that don't share our capacities. The problem of AI for gaming is already half-solved by our social intelligence alone. Combined, Braitenberg vehicle-emulating AI systems and our overly-emotional brains produce an irresistible illusion.
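A Braitenberg vehicle is small enough to write down in full. The sketch below assumes a two-wheeled vehicle with two light sensors and nothing in between but wiring; the sensor falloff, gain, wheel spacing and function names are illustrative choices of mine, not anything taken from Braitenberg's book.

```python
import math

def sensor_reading(sensor_xy, light_xy):
    """Brightness falls off with squared distance. No memory, no model."""
    dx = light_xy[0] - sensor_xy[0]
    dy = light_xy[1] - sensor_xy[1]
    return 1.0 / (1.0 + dx * dx + dy * dy)

def step(x, y, heading, light_xy, crossed=True, dt=0.1, gain=5.0, width=0.5):
    """Advance the vehicle one tick; returns the new (x, y, heading)."""
    # Sensors sit at the front-left and front-right of the vehicle.
    left = (x + math.cos(heading + 0.5), y + math.sin(heading + 0.5))
    right = (x + math.cos(heading - 0.5), y + math.sin(heading - 0.5))
    s_left = sensor_reading(left, light_xy)
    s_right = sensor_reading(right, light_xy)
    if crossed:
        # Each sensor drives the opposite wheel: the vehicle turns towards the light.
        v_left, v_right = gain * s_right, gain * s_left
    else:
        # Each sensor drives the wheel on its own side: the vehicle turns away.
        v_left, v_right = gain * s_left, gain * s_right
    speed = (v_left + v_right) / 2
    turn = (v_right - v_left) / width  # a faster right wheel steers left
    heading += turn * dt
    x += speed * math.cos(heading) * dt
    y += speed * math.sin(heading) * dt
    return x, y, heading

# Assumed example: a light off to the vehicle's left.
state = (0.0, 0.0, 0.0)
for _ in range(100):
    state = step(*state, light_xy=(0.0, 5.0))
```

Swapping two wires is the entire difference between a vehicle that looks like it 'wants' the light and one that looks like it 'fears' it.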

What I've grown to love about games is that as engine-run simulations they are often forced to solve scientific problems bottom-up and in bioinspired ways. Whatever complexity it's given, video game AI has massive advantages over AlphaGo/Star and their ilk purely by having bodies/animations that are situated in a virtual environment. 'Situatedness' refers to the fact that as agents we only ever exist in the context of an environment and a body. Thus, no natural complex behaviour has ever emerged without a body interacting with an environment - a brain-body-environment interaction. Being situated in an environment with other conspecific (same species) agents demanded complex social behavior that drove both brain evolution and intelligence in primates and birds (the social intelligence hypothesis). Indeed, Anil Seth argues that consciousness itself is the result of self-sustaining, surviving bodies more than intelligence. Far from the popular culture concern that your phone will one day gain consciousness, it's hard to conceive that a complex, yet formless, lonely and thriving AI could thus ever share our suffering.

It's easy to be negative about the lack of progress in gaming's AI systems, but a whistlestop tour, whilst showing some impressively long delays between theory and implementation, also has a handful of significant advances. Finite state machine (FSM) systems were first based on research from 1955, way before they saw their popular implementation in everything from Pac-Man to the more complex Half-Life 1. It wasn't until 2005 that Goal-Oriented Action Planning (GOAP) successfully introduced agent planning to FSM game AI in F.E.A.R. Even so, the underlying research sees its origins in the 70s! More recently we've seen everything from the enhanced hierarchical finite state machines (HFSMs) in Wolfenstein: The New Order and DOOM 2016 to the more vigorous advances of behaviour trees in Halo 2 and 3 and hierarchical task networks (HTNs) in Killzone 3 and Horizon Zero Dawn. We still see the oldies persist too, with FSMs used for the Arkham games and GOAP used for Deus Ex: Human Revolution. There's no one-size-fits-all method. Whilst the lack of mass migration to any one system seems astonishing, the selection and modification of AI systems on a game-by-game basis to fit the niche of a game's requirements is one of the medium's greatest strengths.

Every game can be a new opportunity for ingenious new solutions that befits their design - even if they're not making use of the latest HTN planner. See DOOM 2016 and its seemingly outdated use of HFSMs with all their drawbacks, but also its ingenious inversion of the AI cover system of RAGE. Instead of seeking out cover, it seeks out an open position near cover to maximise visibility to the player and enhance the combat flow. It's certainly not traditional intelligence. The usual survival pressures have been flipped on their head to create agents that have a deathwish. It's not an advancement in computation, it's just clever behaviour emerging from simple rules to fit the niche of the game. Is video game AI not quite like our animal and algorithmic friends in being entirely fit for purpose in this way? Intelligently stupid?

Whilst gaming is appropriated as the next problem to have neural networks solve whilst in the shoes human players would usually don, the appetite for creating robust virtual agents with the sharp edge of progress isn't there yet. The question is, would we want it? It's tempting to just riff on the past and suggest we might see 2011's deep learning advancements become mainstream in 2040, but what we'd be contemplating is games utterly transformed from the purpose-led design of today to something both outrageously resource-intensive and wholly unpredictable. If game designers currently use what's tantamount to intelligent design to create agents - carving their behaviour to a specific game title's niche - perhaps deep learning algorithms would be more like guided evolution. In many ways the hand of the designer and artistry is lost. Would it even yield gaming improvements?

Conceivably. Consider the recent AI Dungeon 2 text adventure game that uses OpenAI's deep learning language models to respond to any input. Whilst it's not perfect, there's something joyous about one of the most infamously inflexible gaming genres becoming infinitely so. There are also the endless possibilities of deep learning generated animations and environments - even whole games. Online toxicity could be a thing of the past. As for behaviour, whilst they probably wouldn't yield intelligently stupid solutions like those employed by our deathwish demons, what if deep learning techniques were kept in their own lane? Having discrete AI systems that could benefit from deep learning like experimental reactive dialogue could conserve elsewhere the creativity of the video game AI of today. Otherwise, games might have to experience a complete paradigm shift - evolve with their agents - to even make it work. Can you also ensure it's not just the preserve of those with resources?

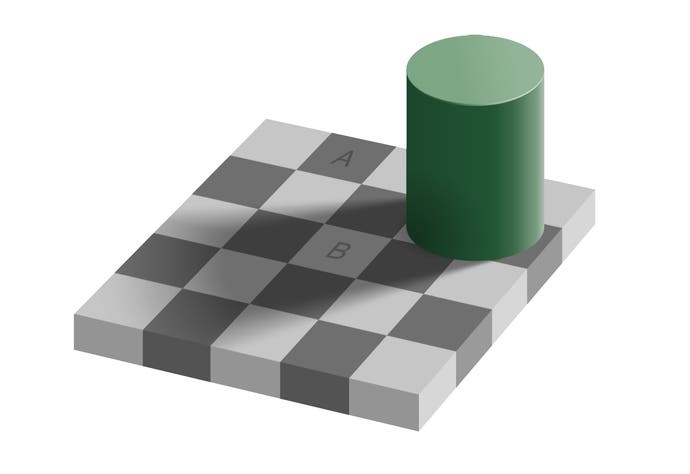

Simple vehicles or not, there are some beautiful, humbling parallels in how we as human beings and game AI fundamentally work. The American psychologist JJ Gibson, who pioneered ecological psychology, argued that far from being amazing world-processors, our brains contain 'matched filters,' neurons that are tuned into the frequencies of and resonate with our natural environment by directly extracting information from the world. Essentially, much like an Apple product (given we are nature's product), we thus have all the proprietary ports into which our environment can readily slot. In possession of the most complex object in the known universe or not, we simply don't have the processing power spare to generate an entire internal model of reality. However, we can recognise the parts that we evolved to by being afforded them dynamically. These include filtering for textures, geometry, facial recognition and reading, movement, biological motion (natural-looking motion), folk physics (our innate notions of the rules of nature) - just to mention a few. All animals have their own. But, expert sensory filterers though we are, it's worth pointing out that perception is the result of the arrow in the opposite direction too (brain outwards). The below optical illusion will have you perceive A as darker than B because your brain predicts a shadow from the object. Connect them with your fingers and you'll find they're the exact same shade. What easier way to filter reality than to project expectations - hallucinate it?

So where the goal and object-oriented lives of a soldier from 2005's F.E.A.R. might have looked a thousand miles away from our own, so too are they constructed by designers to resonate selectively with their environment. Quite pleasingly to me, F.E.A.R.'s agents have short but frequent plans with an average of less than three actions that they plan to execute. Pac-Man ghosts have only single action plans! This is compared to a potential thirty actions in an HTN. Whilst I understand that these hierarchies of strings of tasks allow for faster, more varied, more adaptive agents, there's a purity to the ultra reactive F.E.A.R. In a small way, it feels more in keeping with our imperfect reactive brains, whilst in both cases being due to our different kinds of memory limitations. The eye-mind hypothesis suggests that for us there is no appreciable lag between what we visually fixate and process. You acquire info when you need it and minimise any use of memory. When you're walking, you fixate ahead of you to deliver the motor information for the required thrust to your grounded foot. VR tests too can demonstrate our 'just in time' computation. When colour/size categorising and moving objects onto a conveyor belt, subjects suffer from change blindness with dramatic object size and colour changes being entirely missed when subjects have already moved on to and fixated on the belt. Animals, AI and humans - we are all reactive agents.
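What a 'short but frequent' plan means is easier to see in code. This is a toy goal-oriented planner, assuming boolean world facts and a plain breadth-first search; it is a stand-in for the idea, not a reconstruction of F.E.A.R.'s planner, and every action name in it is hypothetical.

```python
from collections import deque

# Hypothetical actions: name -> (preconditions, effects).
ACTIONS = {
    "draw_weapon": ({}, {"armed": True}),
    "go_to_cover": ({}, {"in_cover": True}),
    "attack_from_cover": ({"armed": True, "in_cover": True}, {"target_dead": True}),
}

def satisfies(state, conditions):
    """True when every required fact holds in the given world state."""
    return all(state.get(key) == value for key, value in conditions.items())

def plan(start, goal, actions=ACTIONS):
    """Return the shortest list of action names reaching `goal`, or None."""
    frontier = deque([(start, [])])
    seen = {frozenset(start.items())}
    while frontier:
        state, steps = frontier.popleft()
        if satisfies(state, goal):
            return steps
        for name, (preconditions, effects) in actions.items():
            if not satisfies(state, preconditions):
                continue
            next_state = {**state, **effects}  # apply the action's effects
            key = frozenset(next_state.items())
            if key not in seen:
                seen.add(key)
                frontier.append((next_state, steps + [name]))
    return None

world = {"armed": False, "in_cover": False, "target_dead": False}
first_plan = plan(world, {"target_dead": True})

# The world changes, so the agent simply plans again from where it now stands.
world["armed"] = True
second_plan = plan(world, {"target_dead": True})
```

The agent carries no long-term script. It plans a couple of steps, acts, and plans again the moment the facts change, which is where the reactive feel comes from.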

Consider the sad existence of a F.E.A.R. soldier. He's nothing but an algorithmically moving animation blind to everything in the world but pathfinding navmesh nodes, 'SmartObjects' and the player - but then who are we to talk? It's amazing to think how visually and cognitively blind we are outside our ecological resonances to everything in the world. Unlike a simple FSM approach, he's a flexible Braitenberg vehicle whose sensors dynamically switch him between behaviours without any set transitions. Interestingly, what he's sensing doesn't comprise light or heat or even his fellow squadmates, but the very abstract, heuristic 'level of threat.' This gives us the illusion of some self-preservation as he moves to cover, dodge rolls when aimed at or blind fires when shot at. In truth, there's nothing behind the eyes - only sensors driving wheels, or, in this case, flexible behaviours. You could conceive of the not-so-easy switch to an AI that senses more natural stimuli and the addition of some deep learning stand-ins for memory and reasoning ability, but it's amazing to think of the complexity gap between those propositions and yet how effective the former solution is. It simply writes itself that the exact same AI system is shared by twenty or so rats in the world at any one time - mistakenly left on in perpetuity in the background to hog resources as you play. The soldiers are really no more complex than the rats they step over.

Algorithms that efficiently handle pathfinding aren't unlike an ant's toolkit, only with less complexity. For a set of coordinates, the A* algorithm optimises a path to a goal by balancing two numbers for every candidate step: the cost of the path travelled so far and a heuristic estimate of what remains (e.g. how far any next path state is from the goal). It always expands the step for which the sum of the two is lowest. Given a living being can't be handed coordinates directly from 'God', they too have to rely on simple, robust and some rule-of-thumb heuristic solutions to cope. Ants use an in-built pedometer and in-built compass using the sun as a cue to take a direct path back to their nest after foraging (path integration) whilst also continuously learning simple views (based on shapes) of the world that they can tend towards replicating when rewalking a familiar route. Travelling further from the nest increases uncertainty, so it's thought that, much like the pathfinding algorithms, they use heuristic values to optimally weight their methods. This negates the need for actual 'certainty calculations' in a small-brained animal. However, even on an entirely familiar route an ant has used for its entire life, if you were to pick them up when they are nest-inbound with food and move them to where they'd usually be nest-outbound without food they'd freeze like an Alien from Aliens: Colonial Marines. With all their robustness otherwise, why? Whilst goal-oriented like a F.E.A.R. soldier, they are more rigidly compartmentalised in how they approach their goals. If you teleported a bot holding the flag across the map in any game of capture the flag, it wouldn't make a blind bit of difference. In this case, extraordinarily, ants almost have the same kind of inflexibility of earlier game AI with FSM-like inflexible transitions between their behaviours. They simply can't access memories for the outward route whilst holding food.
Whilst having to do so much less, the simple flexibility of game AI appears more intelligent. With the benefit of spatial cells in humans, we're unlikely to become so navigationally unstuck, but our experience of conditional, prompted memories isn't so unlike the stranded ants.
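For the curious, the A* balancing act mentioned above fits in a few lines. This is a minimal sketch assuming a small grid of free cells (0) and walls (1), four-way movement, a step cost of one and a Manhattan-distance heuristic; real games typically run it over navmesh nodes rather than grid squares, and the function name is my own.

```python
import heapq

def a_star(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) and 1 (wall), or None."""
    def heuristic(cell):
        # Manhattan distance: an optimistic guess at the remaining cost.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk the breadcrumbs back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route to this cell was found
        row, col = cell
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            in_bounds = 0 <= nxt[0] < len(grid) and 0 <= nxt[1] < len(grid[0])
            if not in_bounds or grid[nxt[0]][nxt[1]] == 1:
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None

# Assumed example map: a wall with a single gap on the right-hand side.
level = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
route = a_star(level, (0, 0), (2, 0))
```

The heuristic never has to be right, only optimistic. Like the ant's rough weighting of its cues, it is a cheap guess that spares the agent from exploring everything.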

Perhaps the biggest spoiler of some semblance of individual agency in most games is the existence of some necessary coordinator/director/overlord AI systems. These exist behind the scenes whispering secrets agent-wide when ideally they could all be managing on their own reactively. It's the illusionary theatre performance nature of video game AI. By far the most impressive trick in F.E.A.R. is how in spite of being completely blind to one another, a soldier committing to an action (e.g. flanking) has the 'squad coordinator' feed the dialogue to another soldier to suggest the first do the said action it's already committed to! The coordinator goes over the heads of the individual agents to use them for a simple but effective illusion of communication. Horizon Zero Dawn has 'the collective,' which manages the distribution of the machine fauna in their herds. Managing a lot of agents as a well-designed, but loose collective just makes sense. What's interesting is how these systems act in place of the senses of the agents. The director of Alien Isolation particularly comes to mind in how it drip feeds information including the location of the player to the Alien AI in place of a completely grounded agent. It's like a Braitenberg vehicle receiving signals from an omnipotent system to enhance its compliance to expected behaviours. The behaviour is emerging from the ether in these situations and not the environment. How might deep learning approach these visitations from 'God?' Indirect communication in a collective isn't entirely divorced from reality, however. Bee foragers assess the state of their hive by how long they have to wait to have their pollen unloaded by storer bees. It's a gross inefficiency - they could just store it themselves. Without any conscious decisions being made, a force outside of themselves in the dynamics of their collective organisation is allowing them to communicate information by independent discovery.
The behaviour is intelligent so that the bees don't have to be.

Behaviour is intelligent. Whether it's produced by small brains or big brains in many ways is inconsequential. Deciding the next step in video game AI might be a matter of control. There's a fascinating Quake 3 Arena story about a gamer leaving the neural network-based bot AI to fight it out for four years, only to return to a ceasefire. Fascinating for several reasons. One, it's completely false. Two, people sufficiently believed from their contact with AI as it stands that it could be true. Three, it's an interesting but completely adverse game outcome that you could easily conceive of bottom-up AI delivering. Why would you want it? But, and I can make this case passionately, in many ways video game AI of today is not inferior or less true to life than neural networks. They embody essential truths of nature and intelligence; that nature tends towards solutions that simplify; that small brains or indeed brainless vehicles can see intelligent behaviour emerge from the situatedness of their bodies interacting in environments they resonate with.

Perhaps the real future is presentational. The Last of Us 2 is adopting elaborate systems that further any illusion of intelligence by giving inter-agent recognised names and personalities to its vehicle husks. Whether we ever stop virtually burning them with magnifying glasses or not, let's hear it for the ants of our favourite pastime. Intelligently stupid as they are, they might be as real as it gets.