Spec Analysis: Project Morpheus

Digital Foundry on what we know about the hardware and how it could host triple-A gameplay.

It's been a long time coming, but Sony has finally revealed its take on the great virtual reality dream. Hot on the heels of Oculus Rift, Project Morpheus can be seen as mainstream validation of VR and the first serious attempt at bringing it to a home console since Sega's aborted 1993 vintage headset. In theory, PlayStation 4 is the perfect home for virtual reality, too - Sony has the most powerful console GPU, a ready-made 3D controller in the form of PlayStation Move and a wealth of developers with direct experience creating stereoscopic 3D games. Not only that, but it also possesses a remarkably talented R&D team.

But while the mainstream access and the surrounding ecosystem are unrivalled, to what extent can Sony match the cutting-edge technology offered by Oculus Rift? The advantages of the PC for a pioneering new gameplay experience are numerous: as a completely open platform, anyone can buy the kit and experiment, from indie developers and hackers to major game publishers. Not only that, but both the VR hardware itself and PC rendering technology can adapt and evolve more quickly. Already there has been discussion of 4K VR displays, and even that might not be quite enough for the optimal immersive experience. In working with a fixed architecture and with limited scope for enhancements, Sony has to get Morpheus right on its first attempt.

We see console VR as a major challenge for Sony on two fronts: hardware and software. On the former, what is clear is that Sony has done everything right based on the tools and technologies available. By most accounts, the Sony VR prototype is a very, very close match to the specs of the second-generation Oculus Rift dev kit. Screen resolution is the same, sensor frequency is a match and a similar system of using an external camera to check positional movement is integrated. There are just a couple of areas where Sony needs to improve - the 1080p LCD screen suffers compared to the OLED display in the second-gen Rift, while some confusion surrounds the 90-degree field of view and how that stacks up against the 110 degrees found in both iterations of the Oculus Rift.

The Verge's eyes-on report suggests that the immersive effect of Morpheus isn't as impressive as that of Oculus Rift, but as Sony's Anton Mikhailov told Eurogamer in an interview due to be published this weekend, there is no current standard for VR specifications, so perhaps the field of view comparison isn't quite as cut and dried as it seems.

"Is the difference diagonal or horizontal? That's the key there - diagonal is basically 1.4 times the horizontal. Ours is 90 degrees horizontal. If you do that calculation diagonal it's over 100, or somewhere - I think it's quite complicated doing the maths because the optics we're using are fairly non-standard, so I can't give you an exact answer. But it's certainly far above 90," Mikhailov told us.

"Because this is the wild west of VR, we don't have a standard way of measuring things. When you buy a 46-inch TV, you know they mean diagonal, not horizontal. If we'd like to compare specs, we need to get a very clear spec in line. And actually optics are even more complex than that - you know, for the head-mount displays it's a little strange because the aspect ratio might not even be 16:9. What you really want is a vertical field of view and a horizontal field of view. Diagonal can be kind of misleading. It gets complicated, and the numbers range wildly - basically we can quote numbers between 90 and 120, depending on how you want to talk about it.

"Another thing is glasses and eye relief. When you get closer to the optics in VR displays, you get a wider field of view. So if you're quoting a number that's at the lens, that could be quite a bit wider. The specs we quote is 90 degrees field of view for a glasses wearing person at 15mm eye relief or further. So it's a very specific spec."
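
Mikhailov's point about diagonal versus horizontal figures can be made concrete with a little trigonometry. The sketch below assumes an idealised flat, rectilinear image plane, which real HMD optics deliberately are not, so treat the numbers as illustrative only; the function names and the 20mm lens radius are hypothetical. Under this model a 90-degree horizontal view on a 960x1080 eye buffer works out at a diagonal comfortably over 100 degrees, and a crude aperture model shows why moving the eye closer to the lens widens the view.

```python
import math


def diagonal_fov(h_deg: float, v_deg: float) -> float:
    """Diagonal field of view (degrees) of an ideal flat, rectilinear frustum.

    On a flat image plane one unit in front of the eye, the half-extents are
    tan(h/2) and tan(v/2); the half-diagonal is their Euclidean length.
    """
    th = math.tan(math.radians(h_deg) / 2)
    tv = math.tan(math.radians(v_deg) / 2)
    return math.degrees(2 * math.atan(math.hypot(th, tv)))


def vertical_fov_from_aspect(h_deg: float, width_px: int, height_px: int) -> float:
    """Vertical FOV implied by square pixels on the same flat image plane."""
    th = math.tan(math.radians(h_deg) / 2)
    return math.degrees(2 * math.atan(th * height_px / width_px))


def aperture_fov(lens_radius_mm: float, eye_relief_mm: float) -> float:
    """FOV through a circular lens viewed from eye_relief_mm away.

    Crude model (an assumption): the lens rim is the only limit on the view.
    """
    return math.degrees(2 * math.atan(lens_radius_mm / eye_relief_mm))


# 90 degrees horizontal on one 960x1080 eye buffer (flat-plane assumption).
v = vertical_fov_from_aspect(90, 960, 1080)  # roughly 97 degrees
d = diagonal_fov(90, v)                      # roughly 113 degrees
print(f"vertical {v:.1f}, diagonal {d:.1f}")

# Hypothetical 20mm lens radius: the closer the eye, the wider the view.
print(aperture_fov(20, 15) > aperture_fov(20, 25))  # True
```

Note that the square-root-of-two ratio applies to the linear dimensions of a square screen, not to angles: in this model a 90x90-degree view gives roughly 109 degrees across the diagonal, not 127.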

What is heartening is that Sony's prototype design is hugely improved over its existing virtual reality personal viewers, and that having approached the same set of problems as Oculus, it has come up with a solution that is, by and large, extremely similar to the second-gen Rift dev kit. There are even some additional bonuses: unlike the PC kit, the audio is standardised with an innovative virtual surround system, something that Oculus is currently leaving to PC owners to figure out for themselves.

While the spec is still in a state of flux, it's likely that the 960x1080 per eye resolution will remain in place for the final version, and based on our experience with the Oculus Rift, something of a reality check is probably in order in terms of what this actually means. In a traditional game, the entire screen area occupies most of your focus, but in a virtual reality set-up, the resolution has to do much more - it stretches out to encompass your entire field of view, including peripheral vision. So, on a basic level, far fewer pixels are spent on the areas that your eyes are actually focusing on.
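
To put rough numbers on this, the sketch below compares average angular resolution, measured in pixels per degree. It assumes the 960 horizontal pixels are spread evenly across a 90-degree view, which ignores lens distortion; the living-room comparison (a 46-inch 1080p screen viewed from 2.5m) is a hypothetical set-up chosen for illustration, and the function names are our own.

```python
import math


def pixels_per_degree(h_pixels: int, h_fov_deg: float) -> float:
    """Average horizontal pixels per degree, ignoring lens distortion."""
    return h_pixels / h_fov_deg


def tv_horizontal_fov(diagonal_in: float, distance_m: float, aspect=(16, 9)) -> float:
    """Horizontal angle (degrees) that a flat TV subtends at a viewing distance."""
    w, h = aspect
    width_m = diagonal_in * 0.0254 * w / math.hypot(w, h)
    return math.degrees(2 * math.atan(width_m / 2 / distance_m))


vr_ppd = pixels_per_degree(960, 90)  # about 10.7 pixels per degree
# Hypothetical living room: a 46-inch 1080p TV viewed from 2.5m.
tv_ppd = pixels_per_degree(1920, tv_horizontal_fov(46, 2.5))
print(f"VR {vr_ppd:.1f} ppd vs TV {tv_ppd:.1f} ppd")
```

On these assumptions the TV delivers several times more pixels per degree of vision than the headset.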

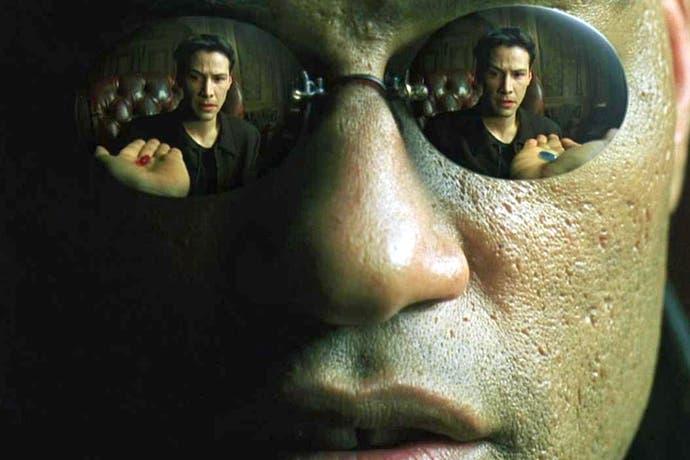

Here's how the original Oculus Rift distorts an image. There's 640x800's worth of resolution per eye here, but the main viewable area is much, much smaller.
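
The warp in the image is a pre-distortion: the rendered view is squeezed into a barrel shape so that the headset's lenses, which add the opposite pincushion distortion, present it to the eye as undistorted. A minimal sketch follows, assuming a common radial-polynomial model; the coefficients are illustrative assumptions, not published Rift or Morpheus values, and the function names are hypothetical.

```python
def barrel_prewarp(x: float, y: float, k=(1.0, 0.22, 0.24, 0.0)):
    """Return the rendered-image coordinate sampled for display point (x, y).

    Coordinates are normalised and centred on the lens axis. The scale factor
    grows with radius, so display pixels near the edge sample from further out
    in the rendered image, squeezing it into a barrel shape that the lens's
    pincushion distortion then stretches back.
    """
    r2 = x * x + y * y
    scale = k[0] + k[1] * r2 + k[2] * r2 ** 2 + k[3] * r2 ** 3
    return x * scale, y * scale


def on_rendered_image(u: float, v: float, extent: float = 1.0) -> bool:
    """Samples landing outside the rendered eye image are shown as black."""
    return abs(u) <= extent and abs(v) <= extent


centre = barrel_prewarp(0.0, 0.0)  # (0.0, 0.0): untouched at the lens centre
mid = barrel_prewarp(0.5, 0.0)     # x grows to about 0.535
corner = barrel_prewarp(0.9, 0.9)  # lands well outside the rendered image
print(centre, mid, on_rendered_image(*corner))
```

Because the scale grows with radius, samples far from the lens centre fall off the rendered image altogether, which is where the black borders of a distorted view come from.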

So, based on this way of presenting VR material - likely to be very similar on Morpheus, but dependent to a certain degree on how the optics are set up - only around 30 per cent of the 960x1080 pixels per eye are spent on the area of the screen where the eye focuses most. Even on a final shipping product, resolution could be an issue, and expectations should be managed as to how this will translate into the actual gameplay experience.
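
The arithmetic behind that estimate is simple enough to sketch. The 30 per cent figure is the rough estimate given above, not a measurement, and the variable names are our own.

```python
import math

EYE_W, EYE_H = 960, 1080
FOCUS_FRACTION = 0.30  # the rough estimate from the text, not a measured value

per_eye = EYE_W * EYE_H                      # 1,036,800 pixels per eye
focus = round(per_eye * FOCUS_FRACTION)      # 311,040 pixels in the focus area
side = math.sqrt(focus)                      # a square about 558 pixels on a side
share_of_1080p = focus / (1920 * 1080)       # 0.15 of a single 1080p frame
print(per_eye, focus, round(side, 1), share_of_1080p)
```

In other words, the region the eye dwells on gets the pixel equivalent of a square a little under 558 pixels on a side, or about 15 per cent of a single 1080p frame.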

On a software level, the situation is also complex. In its presentation, Sony outlined six key challenges in providing a state-of-the-art VR experience: sight, sound, tracking, control, ease of use and content. However, on a pure technological level, we're going to focus on a few of our own: stereoscopic rendering, image quality and performance.

First of all, virtual reality demands stereoscopic rendering, an additional system load that caused significant frame-rate drops and resolution reductions on PS3. Things will be different for PS4: additional fill-rate isn't required because Morpheus is still rendering the equivalent of native 1080p (two 960x1080 views), whereas on PS3 stereo 3D doubled the pixel count of a native 720p game. However, geometry processing is an issue: to produce a true stereoscopic image, the view needs to be generated from two distinct angles, incurring a level of overhead that as yet remains unknown. What we do know is that the AMD Pitcairn architecture on which the PS4's GPU is based has formidable geometry processing onboard, and the one PS4 title that does support stereo 3D - Frozenbyte's Trine 2 - internally runs at 1080p at 120fps with only minimal compromises over the 2D version.
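
Both halves of that argument, extra geometry work but no extra fill-rate, can be sketched briefly. Each eye is a separate camera offset sideways from the head position, so the scene is transformed and drawn once per eye, while the combined pixel count of two 960x1080 views matches one 1080p frame. The coordinate convention, the 64mm interpupillary distance and the function names are assumptions, and real VR renderers typically also give each eye its own projection, which this sketch omits.

```python
import math


def eye_positions(head, yaw_rad, ipd_m=0.064):
    """Left/right eye centres for a head at `head` turned by yaw_rad about +Y.

    Convention (an assumption): right-handed, +Y up, the unrotated view looks
    down -Z, so the head's right vector is (cos yaw, 0, -sin yaw).
    """
    rx, rz = math.cos(yaw_rad), -math.sin(yaw_rad)
    half = ipd_m / 2
    hx, hy, hz = head
    left = (hx - rx * half, hy, hz - rz * half)
    right = (hx + rx * half, hy, hz + rz * half)
    return left, right


def stereo_pixels(eye_w: int, eye_h: int) -> int:
    """Pixels shaded per stereo frame: one full view per eye."""
    return 2 * eye_w * eye_h


# Each eye position gets its own view matrix, so scene geometry is processed
# once per eye even though the combined pixel count need not grow.
left, right = eye_positions((0.0, 1.6, 0.0), 0.0)
print(left, right)
print(stereo_pixels(960, 1080) == 1920 * 1080)     # True: same as one 1080p frame
print(stereo_pixels(1280, 720) / (1280 * 720))     # 2.0: double a 720p frame
```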

However, a very real issue facing developers is image quality. There's a massive difference between playing in the living room or at a desk and the VR experience, where the screen is literally centimetres from your eyes. We've seen a lot of rendering techniques that look great at a distance but wouldn't really hold up close. Take anti-aliasing, for example. VR thrives on very high levels of multi-sampling anti-aliasing (MSAA) to eliminate jaggies, but the problem here is that the technique has largely fallen out of use in console games, with developers opting to deploy GPU resources and bandwidth elsewhere.
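
Multi-sampling works by testing several sample positions inside each pixel for coverage while shading each pixel only once per triangle; averaging the samples then softens an edge according to how much of the pixel the triangle covers. The sketch below uses an illustrative 4x rotated-grid pattern (real sample positions are set by the GPU and graphics API) and a hypothetical vertical edge; the function names are our own.

```python
# Illustrative 4x rotated-grid sample offsets, in pixels from the pixel centre.
# Real sample positions are defined by the GPU and graphics API.
SAMPLES_4X = [(-2 / 16, -6 / 16), (6 / 16, -2 / 16), (-6 / 16, 2 / 16), (2 / 16, 6 / 16)]
SAMPLE_1X = [(0.0, 0.0)]  # no anti-aliasing: test the pixel centre only


def coverage(px, py, inside, samples):
    """Fraction of a pixel's sample points that fall inside the primitive."""
    cx, cy = px + 0.5, py + 0.5
    hits = sum(1 for dx, dy in samples if inside(cx + dx, cy + dy))
    return hits / len(samples)


def resolve(fg, bg, cov):
    """Average of the samples: the primitive's colour (shaded once per pixel)
    in the covered samples, the background colour in the rest."""
    return tuple(cov * f + (1 - cov) * b for f, b in zip(fg, bg))


def left_half(x, y):
    """A hypothetical polygon edge running vertically through x = 0.5."""
    return x < 0.5


aliased = coverage(0, 0, left_half, SAMPLE_1X)  # 0.0: the pixel is all-or-nothing
msaa = coverage(0, 0, left_half, SAMPLES_4X)    # 0.5: two of four samples hit
print(aliased, msaa, resolve((1.0, 1.0, 1.0), (0.0, 0.0, 0.0), msaa))
```

With one sample per pixel the edge pixel is either fully on or fully off, which is the stair-stepping that becomes so visible at nose-length; with four samples it resolves to a half-tone.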

Instead of MSAA, we see post-process equivalents like FXAA, which work fine in a standard gaming environment but are far less effective viewed close up. Even the most advanced post-process AA, such as the wonderful SMAA T2X found in inFamous: Second Son, won't really hold up when viewed at nose-length, even factoring in the much smaller screen. In short, if artefacts are noticeable in a standard gaming environment, they will be considerably amplified on a VR display. Now, what is interesting is just how little of the rendered frame-buffer is a point of focus for the eye - we can't help but wonder whether so much rendering power needs to be dedicated to the areas of the screen relegated to peripheral vision. VR pioneers working on the Sony platform can optimise on a granular level, knowing that what they see in-house will be identical to the experience at home.
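
The idea of spending fewer pixels on the periphery can be costed with a back-of-the-envelope model. This is a hypothetical technique sketch, not anything Sony has announced: keep the central focus region (the rough 30 per cent estimated earlier) at full resolution and render the rest at reduced resolution. The function name is our own, and the model ignores the extra cost of compositing the two regions.

```python
def relative_shading_cost(focus_fraction: float, periphery_scale: float) -> float:
    """Shading cost relative to rendering the whole eye buffer at full resolution.

    focus_fraction: share of the eye buffer kept at full resolution.
    periphery_scale: linear resolution scale for everything else (0.5 means
    half width and half height, so a quarter of the pixels).
    Ignores the cost of compositing the two regions.
    """
    return focus_fraction + (1.0 - focus_fraction) * periphery_scale ** 2


# Keep a ~30 per cent focus area sharp and halve the periphery's resolution.
print(relative_shading_cost(0.30, 0.5))  # about 0.475: under half the pixels
print(relative_shading_cost(0.30, 1.0))  # no saving
```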

Certainly, creating a viable VR experience is no mean feat. Attempts to simply graft VR support onto existing games with the aid of the Oculus Rift dev kit typically produce an effect that is promising (Mirror's Edge, for example) but some way off an experience that actually works for gameplay. When we think of VR on PlayStation, we immediately want the best, cutting-edge games operating in both 2D and VR modes - we want to buy the next Killzone knowing that it plays fine in the lounge and offers a state-of-the-art VR experience. For that dream to become reality, game developers will need to effectively design significant portions of the game twice, to ensure the best possible experience in both modes. Beyond game design, QA will need to be carried out twice, too, and performance optimisation is taken to a whole new level.

All of which leads us on to the question of frame-rate. As with image quality, the requirements for good VR are far more stringent than those of the typical console game. Low latency and high frame-rates are a must for this kind of experience, and that's a tough nut to crack when the console standard remains 30fps. Eurogamer's man at GDC, Martin Robinson, asked the Sony team how a 30fps game could be made to run at 60fps for VR, and it seems that they are pinning their hopes on expensive post-process effects - depth of field, for example - being pared back or removed completely.

In truth, many effects like this shouldn't be required in a VR environment - the eye will naturally refocus and provide its own depth of field, for example, while motion blur is far less of a requirement in stereoscopy, especially if the game runs at 60fps. In other cases, the trade will be simple: reduced image quality will be more than offset by the remarkable immersion VR offers. However, we still find it quite unlikely that paring back effects will be a cure-all for most games. It does nothing for a title that is CPU-bound, a likely scenario - only reducing the complexity of the game simulation can help there, and we're not sure that's a trade developers will want to make.
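
Why trimming GPU effects can't rescue a CPU-bound game is easiest to see with a simple pipelined frame-time model, in which CPU and GPU work overlap and the slower of the two sets the frame-rate. All of the millisecond figures below are hypothetical, as are the function names.

```python
def frame_ms(cpu_ms: float, gpu_ms: float) -> float:
    """With CPU and GPU work overlapped (pipelined), the slower side sets the pace."""
    return max(cpu_ms, gpu_ms)


def hits_target(cpu_ms: float, gpu_ms: float, target_fps: float) -> bool:
    """Does the frame fit inside the time budget for the target frame-rate?"""
    return frame_ms(cpu_ms, gpu_ms) <= 1000.0 / target_fps


# Hypothetical 30fps title: 28ms of CPU simulation, 32ms of GPU work,
# 10ms of which is post-processing (depth of field, motion blur and so on).
cpu, gpu, post = 28.0, 32.0, 10.0
print(hits_target(cpu, gpu, 30))                # True: 32ms fits the 33.3ms budget
print(hits_target(cpu, gpu - post, 60))         # False: now CPU-bound at 28ms
print(round(1000 / frame_ms(cpu, gpu - post), 1))  # 35.7fps, nowhere near 60
```

Cutting the post-processing moves the bottleneck to the CPU; reaching 60fps would mean roughly halving the simulation cost as well.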

Certainly, a 30fps VR experience would be pretty grim based on our experience with the Oculus Rift. Even at 60Hz, there are reports from GDC of obvious motion blur. Moving from LCD to an OLED panel will help (and this is what Sony is planning), but not so much if the game is running at a lower frame-rate to begin with. More challenging is that absolute consistency in gaming performance is the exception rather than the norm. Variable frame-rates are a killer for good VR gameplay, which requires a far more stringent approach to image composition and refresh to keep you immersed in the experience.
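
Two of these effects can be put into rough numbers. The first function estimates the smear seen when the eye tracks a world-fixed object while the head turns and each frame stays lit for the whole refresh. A common explanation for OLED's advantage, assumed here rather than stated by Sony, is that its pixels switch fast enough to be lit for only a fraction of each refresh (low persistence). The second function shows how v-sync turns a slight overrun into a large jump in how long an image stays on screen. The head speed, persistence times and function names are hypothetical.

```python
import math


def persistence_smear_px(head_deg_per_s: float, persistence_ms: float,
                         px_per_deg: float) -> float:
    """Approximate smear, in display pixels, when the eye stays locked on a
    world-fixed object during a head turn while each frame is lit for
    persistence_ms (the image holds still while lit, the eye keeps moving)."""
    return head_deg_per_s * (persistence_ms / 1000.0) * px_per_deg


def on_screen_ms(render_ms: float, refresh_hz: float = 60.0) -> float:
    """With v-sync, a new frame can only appear on a refresh boundary, so the
    previous image stays up for a whole number of refresh intervals."""
    interval = 1000.0 / refresh_hz
    return math.ceil(render_ms / interval) * interval


ppd = 960 / 90  # average pixels per degree for a 960-pixel, 90-degree view
print(persistence_smear_px(100, 1000 / 60, ppd))  # ~17.8px: full persistence at 60Hz
print(persistence_smear_px(100, 2.0, ppd))        # ~2.1px: hypothetical 2ms strobe
print(on_screen_ms(16.0), on_screen_ms(17.0))     # ~16.7ms vs ~33.3ms
```

A frame that misses the 60Hz deadline by a single millisecond leaves the previous image on screen for twice as long, which is exactly the kind of inconsistency that breaks immersion.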

In short, the potential for Project Morpheus is mouth-watering, but the challenges facing developers are substantial - Sony itself acknowledges that the highest-possible frame-rates and the lowest-possible latency are two key elements behind a strong VR game, and neither is exactly part and parcel of the typical triple-A console game right now.
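
A crude latency budget shows why frame-rate and latency are so closely linked. This pipeline model is a hypothetical sketch, not Sony's: a wait for the next tracking sample, two frames in flight (simulation, then rendering) and one 60Hz refresh for scan-out. The 1000Hz sensor rate is an assumed figure, not one quoted for Morpheus, and the function names are our own.

```python
def frame_time_ms(fps: float) -> float:
    """Time budget for one frame at the given frame-rate."""
    return 1000.0 / fps


def motion_to_photon_ms(fps: float, sensor_hz: float = 1000.0,
                        frames_in_flight: int = 2, refresh_hz: float = 60.0) -> float:
    """Crude worst-case motion-to-photon estimate (hypothetical pipeline):
    wait for the next sensor sample, then `frames_in_flight` whole frames
    (for example CPU simulation, then GPU rendering), then one refresh to
    scan the image out."""
    return (1000.0 / sensor_hz
            + frames_in_flight * frame_time_ms(fps)
            + 1000.0 / refresh_hz)


for fps in (30, 60):
    print(fps, round(motion_to_photon_ms(fps), 1))
# Under these assumptions: 30fps -> 84.3ms, 60fps -> 51.0ms
```

Because every frame in flight costs a full frame time, doubling the frame-rate removes roughly 33ms of latency in this model, far more than any single tweak elsewhere in the pipeline.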

All of which sets up an interesting variation in approach between Project Morpheus and Oculus Rift on PC. Palmer Luckey's outfit will be banking on the relentless pace of technological advancement in combination with the user choosing their own compromises, in order to get the best level of VR performance. Morpheus relies upon a more modest, but fixed platform, with the developers firmly in control of what you see and how well it runs. It's too early to tell just how well Morpheus will pan out - but what's clear from the Sony presentation is that the firm is not really any further advanced in its work than Oculus. Quite apart from the lack of final production hardware, this is borne out by the lack of any actual examples of first-party software ready for showtime, not even the oft-rumoured DriveClub. The two games on display at GDC - Eve: Valkyrie and Thief - are both running on PC hardware.

It's early days, then, but truly exciting stuff. By the time Morpheus ships, we can reasonably expect PS4 to have sold tens of millions of units, with a mainstream reach that can only benefit the take-up of VR gaming as a whole, be it on computer or console. While Oculus has the benefit of an open platform available to all, Sony arguably has the advantage in terms of a carefully curated ecosystem - and a superb controller of choice in the form of the hitherto overlooked PlayStation Move. Getting everything lined up perfectly will be challenging, but Project Morpheus' introduction at GDC is a strong start.