Face-Off: Watch Dogs

Hack once again with the ill behaviour.

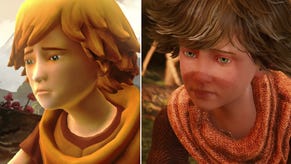

Booting up Watch Dogs on Xbox One directly after a PlayStation 4 play session is a surprisingly pleasant experience. 792p? Really? The introductory engine-driven cut-scenes - along with many other in-engine sequences - are a remarkably close match, not only with the PS4 version, but with the PC release running at full 1080p. Moving into gameplay, the difference becomes more evident, but one thing to make clear from the off is that the emphasis in Watch Dogs' presentation is on lighting and effects and how they interact with materials, and this kind of emphasis remains flattering despite the difference in resolution. Titanfall - another 792p title - didn't compare well to its higher-resolution equivalent, but Watch Dogs on Xbox One gets away with it.

If there's one word to sum up the advantage that PS4 Watch Dogs has over its Xbox One equivalent, it's refinement. Reduced aliasing here, less frequent screen-tear there - the impression you get is of a game that fundamentally offers the same package but feels a little more polished and solid on the Sony platform. Obviously, given the choice we'd take the extra refinement, but Xbox One owners can still buy Watch Dogs safe in the knowledge that Ubisoft Montreal's gameplay vision is delivered intact.

On PC, the story isn't quite so rosy. Despite the release of drivers from AMD and Nvidia apparently optimised for the game, we cannot find any way of playing this game at maximum settings without some off-putting performance issues. We've tried GTX 780s in SLI, Radeon R9 290X, GTX 780 Ti and even the 6GB GTX Titan, all of them backed up by a six-core Intel i7 3930K - a level of CPU power that significantly exceeds the recommended spec. In all cases, there are frame-rate and consistency issues that need to be resolved, and a particular rethink is required in the way that AMD graphics hardware handles this game.

Let's kick off with the comparison assets then. We're running the PS4 and Xbox One captured at 1080p as per the norm, while our PC is set to the default ultra settings and capped at 30fps in order to mitigate some of the performance issues. Getting an exact match between all three platforms isn't easy - the main problem being the constantly adjusting time-of-day and weather patterns, which may explain some of the lighting differences you see between each version. In addition to the vids, there's the Watch Dogs comparison gallery too.

"Xbox One's 792p resolution didn't work out so well on Titanfall but it's a lot kinder to Watch Dogs' more filmic presentation."

In truth, our overall conclusions on image quality haven't significantly changed since we posted our initial Watch Dogs performance analysis on Wednesday. The resolution difference between Xbox One, PS4 and our chosen PC setting (in this case, native 1080p) isn't really an issue during cut-scenes, but is more keenly felt during gameplay - especially on objects highlighted by the strobing white hacking overlay. Some HUD elements actually appear to be anti-aliased as part of the console post-process, so they can look a touch rougher on Xbox One, but this isn't really a massive issue.
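For a sense of scale, the raw pixel counts behind those resolution labels can be worked out with a little arithmetic. The sketch below is ours rather than anything taken from the game: it assumes every framebuffer is 16:9, takes 792p for Xbox One, 900p for PS4 and 1080p for our PC setting, and uses illustrative function names.

```python
from fractions import Fraction

# Assumption: all three versions render a 16:9 frame.
ASPECT = Fraction(16, 9)

# Vertical resolutions discussed in this article.
RESOLUTIONS = {"Xbox One (792p)": 792, "PS4 (900p)": 900, "PC (1080p)": 1080}


def pixel_count(height):
    """Total pixels in a 16:9 frame of the given height."""
    width = int(height * ASPECT)
    return width * height


def share_of_1080p(height):
    """Pixel count as a fraction of a native 1080p frame."""
    return pixel_count(height) / pixel_count(1080)


if __name__ == "__main__":
    for label, height in RESOLUTIONS.items():
        print(f"{label}: {pixel_count(height):,} pixels, "
              f"{share_of_1080p(height):.0%} of 1080p")
```

On these assumptions, 792p works out at a little over half the pixels of a native 1080p frame, which is why the difference shows most on fine, high-contrast edges such as the hacking overlay.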

Shadows are reworked on console - rougher around the edges on PS4 compared to PC, and with a lower resolution on Xbox One - but the differences are only really noticeable at short range, most especially during cut-scenes. In-game, shadow quality on both versions is clearly different, but neither feels particularly out of place. The major difference is in comparison to the PC version, where the definition and sheer volume of shadows really is on another level on 'ultra' settings.

Ambient occlusion is probably the next most noticeable difference, especially in the rather stark midday sunlit environments, where it comes into its own in adding depth to the scene. MHBAO, Ubisoft's in-house solution, is described by Nvidia as a "half-resolution, console quality... technique". It seems to be in place on both PS4 and Xbox One, and it's also the standard solution for PC out of the box. However, its strength is significantly lowered on the Microsoft console, reducing its impact somewhat. PC owners get the real benefit with the inclusion of Nvidia's HBAO+, which luckily works on any graphics card. In its high setting, there's an almost night-and-day difference and we highly recommend enabling this. There is a GPU hit, but despite its Nvidia origins, it seems to be vendor-agnostic in terms of performance.

Elsewhere, we seem to be looking at parity between Xbox One and PS4 - which is curious bearing in mind the discovery of an internal engine XML document that suggests Xbox One-specific settings for environment, vegetation and geometry quality.

Try as we might, we couldn't find any substantial differences on Xbox One in the areas highlighted. It may simply be the case that bespoke code was required in those areas for the custom Xbox hardware, or perhaps the settings are remnants of code that no longer exists in the final game. Whatever the explanation, we find nothing in any of those areas likely to impact your enjoyment of the game. Certainly in other taxing areas - such as water simulation - we see parity between the two consoles. Only by moving onto PC with its ultra settings do we see any major improvement.

Watch Dogs' creative director Jonathan Morin says the console versions are a match for the PC version on high settings and it's fair to say that 'high' offers up the majority of the game's graphical features. However, on top of the much-improved water simulation, the ultra preset also offers improved dynamic reflections, and a longer view distance (though the effectiveness of this in city scenes is fairly limited). Ultra-quality settings are a luxury really, but they're nice refinements to have if the GPU budget is available.

Aside from rendering quality presets, there's also support for various anti-aliasing techniques: FXAA, SMAA, the temporal SMAA used in the console versions, MSAA and TXAA. We used temporal SMAA for most of our comparison shots - it offers the best balance between quality and performance - but a special mention must go to the Nvidia-exclusive TXAA. It's not generally liked by many PC gamers owing to the blurring effect it has on high-frequency texture detail. However, it's a great match for Watch Dogs' more filmic style. The 2x option looks good, but 4x is better - albeit rather more taxing on GPU resources.

We've previously covered console performance and have little else to add - Watch Dogs is a pretty solid title on PS4, adopting a 30fps frame-rate cap and utilising adaptive v-sync when the engine fails to complete rendering the next frame in the allotted 33ms window. By and large, gameplay remains at the target frame-rate with few deviations beneath - the exception being packed scenes with lots of cars and explosions. Xbox One operates on much the same level - the difference being screen-tear that is a little more frequent and more noticeable. Unlike the PS4 version, tearing can also infiltrate cut-scenes and general driving too, though it is rare.
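The adaptive v-sync behaviour described here can be sketched as a simple rule: a frame that finishes inside the budget waits for the display refresh, while a frame that overruns is flipped straight away and tears. This is a simplified model of the general technique, not Ubisoft's code; the 60Hz display, the function names and the sample render times are all assumptions made for illustration.

```python
REFRESH_HZ = 60                            # assumption: 60Hz display
FRAME_BUDGET_MS = 2 * 1000 / REFRESH_HZ    # 30fps cap = two refreshes (~33.3ms)


def present_mode(render_ms):
    """Classify how a frame is shown under a simplified adaptive v-sync model."""
    if render_ms <= FRAME_BUDGET_MS:
        return "synced"   # finished in time: wait for the refresh, no tear
    return "torn"         # over budget: flip immediately, visible tear


def tear_ratio(render_times_ms):
    """Fraction of frames in a capture that missed the budget and tore."""
    torn = sum(1 for t in render_times_ms if present_mode(t) == "torn")
    return torn / len(render_times_ms)


if __name__ == "__main__":
    # Hypothetical render times in milliseconds, not measured data.
    sample = [30.1, 32.8, 35.2, 31.0]
    print([present_mode(t) for t in sample])
    print(f"torn frames: {tear_ratio(sample):.0%}")
```

In this model, a version that misses its budget slightly more often simply produces more torn frames, which is the pattern we describe on Xbox One.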

The overall perception we get here is that Ubisoft targeted its optimisation efforts for PS4, scaled back the engine proportionately for Xbox One and wasn't quite so focused on hitting that 30fps target there. The overall effect is of a title that has a reassuring level of solidity on PS4, and while still fine overall, just feels a little more wobbly on the Microsoft console.

"Performance between the consoles is very similar indeed, but it's the PS4 that maintains its frame-rate target with more consistency."

In contrast, the PC version still needs some work. In terms of pure GPU workload, there's absolutely no reason why a good high-end card like the GTX 780 or R9 290 shouldn't offer up a locked 60fps at 1080p at the highest settings, while the GTX 770 and 280X should be equipped to hit the same level of performance on the high preset. Not only do we see significant performance drops on high-end hardware, Watch Dogs also represents the biggest difference we've seen frame-rate-wise between the two vendors' equivalent cards. (Indeed, the gulf is enough to have AMD crying foul, suggesting that Nvidia's GameWorks integration makes the game extremely hard to optimise around the Radeon hardware, something its competitor strenuously denies. Bearing in mind that this bespoke integration seems to amount to two anti-aliasing modes and ambient occlusion settings, we're inclined to give Nvidia the benefit of the doubt.)

Politics aside, what's very clear is that those pursuing 60fps gameplay are facing a challenge. Texture management seems to be the major culprit - streaming ultra-level assets into and out of RAM simply doesn't happen fast enough, resulting in noticeable stutter. It's not a video RAM capacity issue based on our testing - we replaced a 3GB GTX 780 Ti with a 6GB GTX Titan, producing the exact same result. Only by lowering texture quality from ultra to high did we improve matters, and even then the stutter wasn't completely eliminated. We also factored out graphical quality presets by dropping down to medium settings - and yet still we saw dips in overall performance. Additionally, we were running the game from SSD on a SATA3 connection.

Swapping in an equivalent AMD card - the 4GB Radeon R9 290X - produced frankly shocking results. With v-sync engaged, ultra settings with either of the two top-end texture levels produced an experience that could rarely exceed 30fps. Only by dropping down to medium could we break the 33ms render-time limit consistently, and even then, the stuttering mess we saw as a result simply wasn't worth the effort. Frame-rates would rise with v-sync turned off of course, but even then, benchmarks published elsewhere suggest a game that simply isn't optimised effectively for AMD hardware.

"We had genuine problems getting consistent frame-rates from the PC version of the game - stutter is a real problem right now."

Clearly something's up. Texture streaming to video RAM seems to require some attention, while the ineffectual Radeon performance seems to be entirely at odds with the same architecture's respectable showing on PS4. We know that Ubisoft is working on improvements, but it's clear that there's a lot to do. In the here and now, Nvidia owners get a better deal - reducing texture levels to the high preset doesn't unduly impact the visual quality of the game and stuttering is mostly confined to driving through the city at speed. The issue is far less pronounced during more sedate gameplay, and missions seem to be mostly fine.

Thus far, we've discussed high-end hardware in order to illustrate that the problems are deep-seated and that you can't buy your way out of the situation. However, on Nvidia GTX 780-class hardware, you can at least get something approaching 1080p60 on the highest quality levels with v-sync engaged. We also tried the GTX 760, where frame-rate varied uncomfortably around 30-40fps, and found that pursuing improved visuals at a locked 30fps was a better option than attempting to hit 60fps. Here we could have ultra-level presets with temporal SMAA at 1080p. Dropping down to the GTX 750 Ti gave us the same experience at the high quality level - effectively a match for PS4, but with a full 1080p resolution. Across the three cards we were reminded very much of Assassin's Creed 4 with v-sync engaged, where GPU power seemed to favour improved visuals rather than higher frame-rates.

One element of the PC version that does deserve praise is the inclusion of what Ubisoft Montreal calls a two-frame v-sync. Assuming you have a 60Hz display, this is effectively a 30fps cap, with proper frame-pacing (that is, a cadence of a unique frame followed by a duplicate). You're limiting your maximum performance level of course, but there's a reason why most console developers cap at 30fps: it ensures a consistent gameplay experience, and also introduces a lot of breathing room for the CPU; completing the simulation and draw calls in 33ms is significantly easier than the 16ms required for a 60fps experience.
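The cadence and the frame-time budgets mentioned above can be illustrated with a short sketch. It assumes a 60Hz display and a sync interval of two refreshes per rendered frame; the function names are ours and are not taken from the game or any graphics API.

```python
def frame_budget_ms(fps):
    """Time available to simulate and render one frame at a given frame-rate."""
    return 1000.0 / fps


def effective_fps(refresh_hz, sync_interval=2):
    """Frame-rate cap when each rendered frame is held for sync_interval refreshes."""
    return refresh_hz / sync_interval


def displayed_frames(refreshes, sync_interval=2):
    """Which rendered frame is on screen at each display refresh."""
    return [r // sync_interval for r in range(refreshes)]


if __name__ == "__main__":
    print(effective_fps(60))        # cap on a 60Hz display
    print(displayed_frames(6))      # unique frame, duplicate, unique frame...
    print(f"{frame_budget_ms(30):.1f}ms vs {frame_budget_ms(60):.1f}ms")
```

Each rendered frame appears on exactly two consecutive refreshes, which is the even pacing that separates a proper 30fps cap from one where frames are held for one refresh, then three.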

Watch Dogs: the Digital Foundry verdict

Watch Dogs on PC should be the best version of the game, and yet somehow we find ourselves on shaky ground. Watch Dogs' graphics technical director Sebastien Viard points to the lack of unified RAM as the main reason behind the difficulties, and says that Ubisoft Montreal is working to resolve the PC issues, but as of this writing, you may find that hitting a consistent 1080p60 even on a high-power games computer just isn't possible.

Bearing in mind the high demands for CPU power (the game recognises eight cores but makes use of six extensively) combined with the currently dodgy GPU situation, re-adjusting your expectations for 1080p30 gameplay and ramping up the graphics quality presets instead may be the better strategy. Just stay well away from the ultra quality textures for the time being.

On the console front, it's all too easy to recommend Watch Dogs on PS4 over its Xbox One equivalent: performance is more consistent and there's obviously the resolution boost to factor in as well. However, for the vast majority of the run of play, the two versions play in exactly the same way, and in motion the worrying notion of a significantly sub-native 792p rendering resolution looks nowhere near as bad as the raw numbers suggest.

The filmic approach to effects work in combination with an aesthetic that eschews jagged edges and intricate texture detail produces a game that presents itself in a mostly similar manner whether you're gaming at 792p, 900p or indeed 1080p. Console-wise, we feel confident in recommending both Xbox One and PS4 versions, but go for the latter if you have your choice of consoles: it's effectively an additional sheen of polish at no extra cost.