The Making of PlayStation 3D

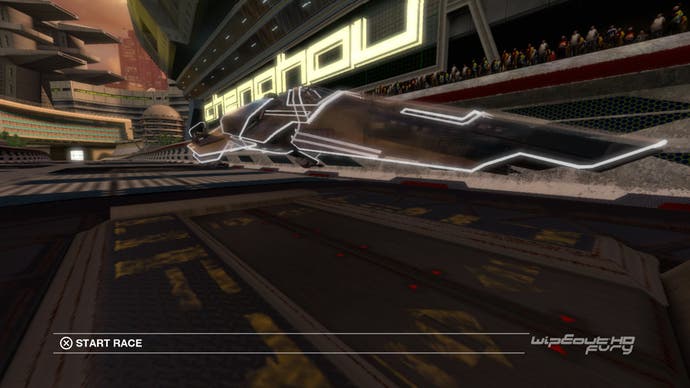

How WipEout HD and MotorStorm gained an extra dimension.

Last week Sony revealed that it is due to launch its new range of 3DTVs alongside a small selection of stereoscopic-enabled PlayStation 3 titles. PAIN, Super Stardust HD and WipEout HD get the full treatment, while a single-level demo of MotorStorm: Pacific Rift is also in the offing. All will be downloadable from the PlayStation Store.

Regular readers of Digital Foundry will know that we're strong advocates of the technology, and that we reckon that sooner or later it's going to become an integral part of the gaming experience, most likely in later-generation consoles when the displays themselves have matured. In a sense, what we're seeing at the moment is "baby steps" - a slow, faltering beginning for what may well be one of the most important advances in screen technology since progressive scan.

At GDC 2010, we caught up with SCEE's stereoscopic team to hear their presentation on the implementation of 3D within current generation video games. The brief given to senior programmer Ian Bickerstaff and senior development manager Simon Benson was simple: to introduce the tech to games developers, explain the basics, reveal the advantages to gameplay and also to address the technical challenges inherent in effectively doubling your pixel throughput in order to provide discrete images for each eye.

"We have a simple three-step implementation process for making games in 3D," says Ian Bickerstaff. "Step one is to create two images. The PS3 has two 1280x720 buffers, in a top/bottom arrangement, with a 30-pixel gap between them using for video timing purposes. It's the left eye image at the top and the right at the bottom."

"The images are automatically converted to HDMI 3D output at 59.94Hz but you can use any frame-rate you want as long as you synchronise to the vertical refresh. That's really important because frame-tearing looks really bad in 3D; the tear will be in one image and not the other, so it's much worse than normal frame tearing."

The current-generation consoles mostly operate at 30FPS already, often with v-sync disengaging when frame-rate drops below that in order to retain the most crisp response and the most fluid visual experience. The Sony team advocates that the game should be v-synced at all times - a stiff challenge bearing in mind that two images need to be created.
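As a rough illustration of what "v-synced at all times" implies, the sketch below lists the frame rates available when every frame waits for a whole number of 59.94Hz refreshes, together with the time budget for rendering both eye images. The function name and the choice of four divisors are ours, purely for illustration.

```python
# Sketch: frame rates reachable when each frame spans a whole number of refreshes.
REFRESH_HZ = 59.94  # HDMI 3D output rate quoted by Bickerstaff


def vsynced_rates(refresh_hz=REFRESH_HZ, max_divisor=4):
    """Return (refreshes per frame, frames per second, budget in ms) tuples."""
    rates = []
    for n in range(1, max_divisor + 1):
        fps = refresh_hz / n
        budget_ms = 1000.0 / fps  # time available to render BOTH eye images
        rates.append((n, fps, budget_ms))
    return rates


for n, fps, budget_ms in vsynced_rates():
    print(f"{n} refresh(es) per frame: {fps:.2f} fps, {budget_ms:.2f} ms per stereo pair")
```

A game holding 29.97FPS therefore has a little over 33ms to produce the left and right images together, and missing that window means dropping to the next divisor rather than tearing.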

"Inevitably there are problems in achieving that performance, hardware upscaling is available and actually the good news is that upscaled 3D images look a lot better than upscaled 2D images," explains Bickerstaff.

"It's the way the brain perceives the world. But if you're going to do that, you need really good anti-aliasing. If in doubt, it's better to have low-resolution images with great anti-aliasing than higher-resolution images with a lot of scintillating pixels going on." With the setup in place for generating the two discrete images, it's time to begin the process of generating the stereoscopic 3D effect, and that begins with the introduction of depth to the scene.

"Step two is to apply the convergence to define the maximum depth of the image, the maximum positive parallax," Bickerstaff says. "It's a 2D X-axis translation in screen-space and we move the left image to the left and the right image to the right. For our games we've used a 1/30th screen width as the default parallax. You need to take care that all of this is applied to every element of your rendering pipeline. If you've got reflections in water - things like that - make sure the shift happens to all the elements. Reflections will need to be calculated for both eyes."

The final step is fairly straightforward.

"What we've got now is an image that is flat but has depth into the screen. Now we're ready for step three which is to apply the inter-axial, to move the cameras apart, to create the final 3D image."

This is a significant amount of additional computational work to add to a game. The impact on performance can be mitigated if the base engine itself is designed for 3D, but clearly all of the launch titles for the new range of displays are adapted from existing code. The question is, how did they do that? It's perhaps no coincidence that two of the titles for the 3D launch originally ran with 1080p support, suggesting some pixel-processing headroom for the generation of two 720p images.

"WipEout HD was originally 1080p at 60Hz: obviously a good foundation to start from. Making the 3D build of this we had to go for two 720p images," says Simon Benson.

"The advantage there is that that's less than 1080p, so we're all right there pixel-count wise: we've got some to spare, we're asking less in terms of pixel processing. But we were fairly geometry bound on WipEout HD. Because it was 60Hz we could just drop to 30Hz and in actual fact, that was it. There was no more work to do. That worked. It took very little time getting the game into 3D; there were very few problems with WipEout."