GeForce GRID: Can Cloud Gaming Match Console Performance?

Digital Foundry on NVIDIA's new tech, plus analysis of the latest Sony rumours.

The gaming world was a much simpler place back in 2009 when cloud-based gameplay streaming couldn't possibly work - at least not to anywhere near the degree of the claims being made at the time. And yet, despite falling short of the local experience and indeed the scant quoted metrics on performance, OnLive could be played. It was sub-optimal in many ways, but it was playable. It delivered a viable first-gen end product which was ripe for improvement, and just three years later we are seeing workable solutions being introduced that could change everything.

At the recent GPU Technology Conference, NVIDIA set out to do exactly that with the unveiling of its new GeForce GRID, an important innovation that potentially solves a lot of problems, both client and server side. Up until now, it's believed that cloud gaming has been achieved by connecting the user to a single PC inside the datacentre with its own discrete GPU. This is hardly power-efficient and it's also very expensive - however, it was also necessary, because graphics cores could not be virtualised across several users in the same way that CPUs have been for some time now. GRID is perhaps the turning point, offering full GPU virtualisation with a power budget of 75W per user. This means that servers can be smaller, cheaper, easier to cool and use less energy.

GRID also targets the quality of the client-side experience, in that NVIDIA believes it has made key inroads in tackling latency, the single biggest barrier to cloud gaming's success. So confident is the company of its technology that it has even committed to actual detailed metrics. So let's have a quick look at the gameplay streaming world as viewed by NVIDIA.

"GeForce GRID is all about minimising server and encoding latency, bringing the Cloud closer to console levels of input lag."

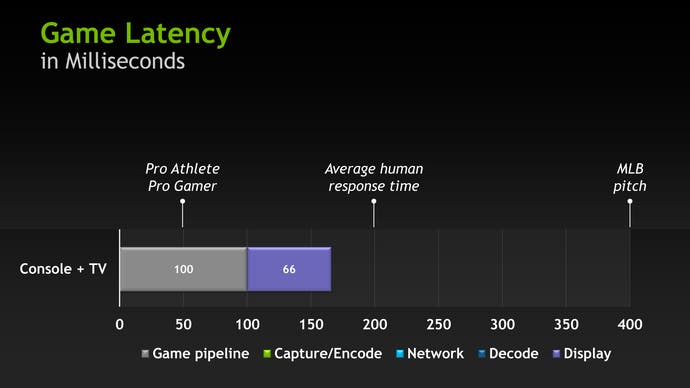

The slide on the left, seeking to put latency into context, is perhaps a bit of a red herring on first reading, certainly for a core gamer - perhaps for any console owner. The difference in response between Modern Warfare 3 and virtually any 30FPS shooter is obvious and is a defining factor in why it is the top-selling console shooter. However, there does seem to be a perceptual threshold at which latency actively impedes gameplay or simply doesn't "feel" right - hence GTA4 feeling muggy to control, and Killzone 2 attracting so much criticism for its controls (fixed in the sequel). And we'd concur that this does seem to be around the 200ms mark, factoring in input plus display lag.

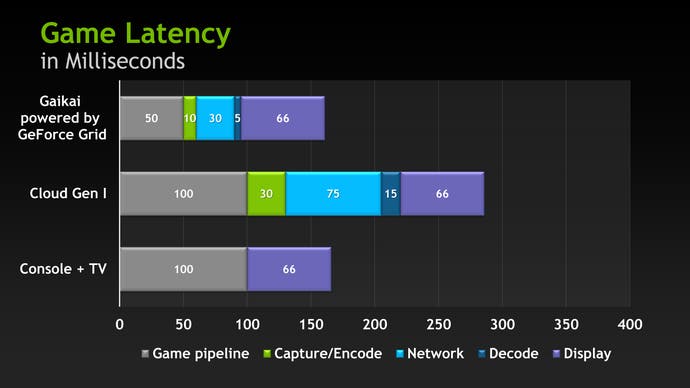

The slide on the right is where things get interesting. The bottom "console plus TV" metric is actually pretty optimistic for standard 30FPS console gameplay (116ms to 133ms is closer to the console norm based on our measurements), but, on the flipside, the 66ms display lag feels a touch over-inflated. The "Cloud Gen I" bar tallies with our OnLive experiences - or rather, OnLive operating generally below par. At its best, and factoring in NVIDIA's 66ms display lag, it would actually be closer to 216ms rather than the indicated 283ms, and just a frame or two away from typical local console lag in a 30FPS game.

The top bar is NVIDIA's stated aim for GeForce GRID - a touch under 150ms, including display latency. Now, that's an ambitious target and there are elements in the breakdown that don't quite make sense to us, but key elements look plausible. The big savings appear to be reserved for network traffic and the capture/encode process. The first element of this equation most likely refers to Gaikai's strategy for more locally placed servers compared to OnLive's smaller number of larger datacentres. The capture/encode latency is the interesting part - NVIDIA's big idea is to use low-latency, fast-read framebuffers linked directly to an onboard compressor.

Where we are a little unclear is on the 5ms video decode, down from the 15ms of "Cloud Gen I" - a useful 10ms saving. We are aware that Gaikai's association with LG for direct integration in its Smart TVs has included an aggressive evaluation of the internals aimed at reducing latency, both in decoding the image data and in scan-out to the screen itself, which may account for this, but it is difficult to believe that this 10ms boost would apply to all devices.

Manufacturer-supplied benchmarks always need to be treated with caution and there is a suspicion here that a best-case scenario is being compared to a worst-case scenario on some elements, but, regardless, it's clear which areas have been targeted by NVIDIA for latency reduction.

"Convenience has won out over quality in the audio and movie markets - there is no reason not to expect gaming to follow suit in the fullness of time."

Can Cloud Catch Up With Console?

There are three major factors at play here: base image quality, video encoding quality and input lag. Our recent OnLive vs. Gaikai Face-Off suggests that David Perry's outfit has made serious strides in improving the first two elements in comparison to OnLive (though new server tech is now rolling out offering improved base image) but in truth, we will always see a difference between pure local imagery and compressed video. This is the fundamental trade-off between quality and convenience - exactly the same thing that has defined MP3 vs. CD and Netflix vs. Blu-ray.

In the here and now, each uncompressed 24-bit RGB 720p frame that is transmitted to your screen from your console takes up around 2.6MB, without factoring in audio. The cloud image is first converted to a different pixel format (YUV 4:2:0, which uses about half the bandwidth) and is then subject to video compression. OnLive only has around 11K per frame, including audio. In its current form, Gaikai runs at around half the frame-rate, so the available bandwidth budget per frame doubles, but there is still an enormous level of compression being introduced. Fast-moving action scenes will always suffer from noticeable macro-blocking unless more data is lavished on the image.
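The arithmetic behind those figures can be sketched in a few lines of Python. This is a rough illustration rather than a model of either service: it assumes a 1280x720 frame, the 5mbps stream rate discussed in this article, and 60FPS and 30FPS as the two frame-rates being compared. The function names are our own, and audio and container overhead are ignored.

```python
def raw_frame_bytes(width, height, bytes_per_pixel):
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def stream_bytes_per_frame(bitrate_mbps, fps):
    """Average bytes available per frame at a given stream bitrate."""
    return bitrate_mbps * 1_000_000 / 8 / fps


# 24-bit RGB uses 3 bytes per pixel; YUV 4:2:0 averages 1.5 bytes per pixel.
rgb = raw_frame_bytes(1280, 720, 3)
yuv420 = raw_frame_bytes(1280, 720, 1.5)

# Assumed stream settings: 5mbps at 60FPS and at 30FPS.
budget_60 = stream_bytes_per_frame(5, 60)
budget_30 = stream_bytes_per_frame(5, 30)

# How hard the encoder has to squeeze each YUV frame at 60FPS.
ratio_60 = yuv420 / budget_60

print(f"RGB frame:      {rgb / 2**20:.2f} MiB")
print(f"YUV 4:2:0:      {yuv420 / 2**20:.2f} MiB")
print(f"5mbps at 60FPS: {budget_60 / 1000:.1f} KB per frame")
print(f"5mbps at 30FPS: {budget_30 / 1000:.1f} KB per frame")
print(f"Compression needed at 60FPS: about {ratio_60:.0f}:1")
```

Under these assumptions the per-frame budget lands at roughly 10KB, in the same ballpark as the 11K quoted above, and the encoder has to shrink each frame by a factor of well over 100.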

As bandwidth increases, so does image quality - but there is a point where the law of diminishing returns kicks in. At its current 5mbps level, we are definitely constricted by the lack of video data, but for 720p at least we do not require a quantum leap in bandwidth to get close-to-pristine video. To show what we need, here we encoded Soul Calibur 5 using the same x264 codec adopted by Gaikai, using a lot of guesswork about the actual settings they would use. It shouldn't be taken as an example of Cloud encoding, but it does show that fundamentally we reach a point where large increases in bandwidth are not matched in terms of image quality.

"Improved broadband infrastructure will eventually address the image quality issue, and we fully expect cloud to come into its own in the fibre-optic era."

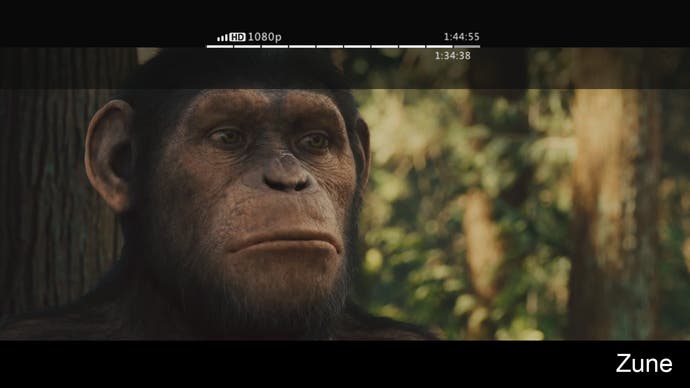

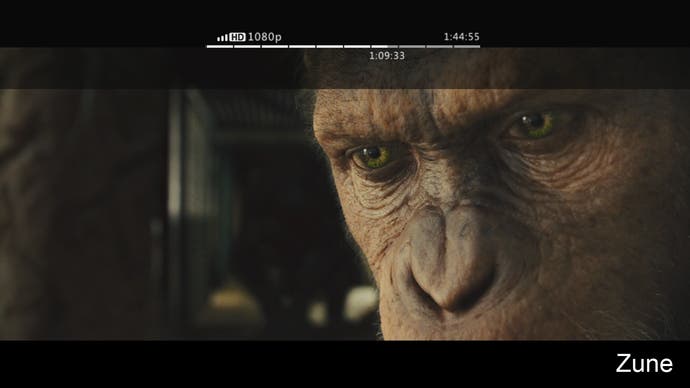

Moving to 10mbps will see a substantial increase in picture quality, but at 20mbps we have to consider how much we actually gain by increasing bandwidth. To offer a comparison, Blu-ray movies offer between five and ten times the raw video data compared to streaming HD alternatives, but only the most ardent of purists would see anything more than a 2-3x increase in quality, as the Planet of the Apes shots above should demonstrate. With fibre-optic connections now becoming more commonplace, offering a baseline 25mbps (more often closer to 40mbps), it's safe to say that concerns about Cloud gaming image quality will be addressed over the course of time by improvements in infrastructure. It still won't match local quality but we will have reached that Netflix/MP3 point where the hit to fidelity probably won't matter to a mass audience.

Local vs. Cloud - The Latency Balance

But what of NVIDIA's claims that the Cloud can consistently match console latencies? Until we get hands on with a GRID server (or, rather, a client connected to one) we have to reserve judgment. But there is a good chance that we - and indeed you - may have already been experimenting with early GRID technology, and the results are promising. We understand from impeccable sources that Gaikai has been using what has been described to us as "a Fermi version of GeForce GRID that contains some of the fast-streaming technology" which precedes a full Kepler rollout in Q4 of this year.

And that may explain this particular moment in history: cloud input latency matching its console equivalent. Bulletstorm running on Xbox 360, with the PC game streamed over Gaikai on a UK ADSL connection. Now, input lag on this game isn't exactly lightning fast, even running locally. We saw a mixture of 116ms and 133ms readings across a run of play. And we should also add a caveat that the Gaikai version's response is not as consistent as it is on the Xbox 360 and network traffic conditions do seem to play a big part in that. However, the fact that we have a close match at all is a major technological achievement and can only be a good foundation to build upon.

"Bulletstorm on Gaikai can match input lag on the Xbox 360 version. What is currently the exception could well become the norm in the fullness of time."

So how is this possible? The fundamental principle of cloud gameplay is that - in very basic terms - if you run games at 60FPS rather than the console-standard 30, input lag drops significantly, usually by around 50ms. Gaikai and OnLive aim to use that time to capture and encode video and audio data, beam it across the internet and have the client decode it in the same time period, giving an equivalent experience.

Current cloud technologies usually overshoot the 50ms target by some margin, but in the Bulletstorm example above we have a shining moment where the technology seems to be hitting the target. It does not do so consistently (re-running the test on Friday night at 6.30pm saw lag increase by two frames, and other Gaikai titles we tried came in at over 200ms), but the fact we get there at all is a seriously impressive achievement. NVIDIA's aim seems to be all about increasing that 50ms window as much as possible and getting more value from the time available by centralising the capture/encode section of the process.
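To put rough numbers on that window, here is a minimal sketch. It assumes, purely for illustration, a game whose input-to-scan-out pipeline is three frames deep (a hypothetical figure chosen because it reproduces the 50ms saving mentioned above), and it converts lag readings into 60Hz frames, which is how the measurements in this article tend to cluster. All names are our own.

```python
FRAME_MS_60 = 1000 / 60  # one 60Hz refresh, roughly 16.7ms


def pipeline_lag_ms(frames_deep, fps):
    """Lag of a pipeline that is `frames_deep` frames long at a given frame-rate."""
    return frames_deep * 1000 / fps


def cloud_window_ms(frames_deep, console_fps=30, server_fps=60):
    """Time freed up by running server-side at a higher frame-rate."""
    return pipeline_lag_ms(frames_deep, console_fps) - pipeline_lag_ms(frames_deep, server_fps)


def ms_to_60hz_frames(ms):
    """Nearest whole number of 60Hz frames for a lag reading in milliseconds."""
    return round(ms / FRAME_MS_60)


# Hypothetical three-frame pipeline: 100ms at 30FPS, 50ms at 60FPS.
window = cloud_window_ms(3)
print(f"Window for capture, encode, transmit, decode: {window:.0f}ms")

# The Bulletstorm readings expressed as 60Hz frames.
print(ms_to_60hz_frames(116), ms_to_60hz_frames(133))

# A two-frame slip, as seen in the peak-time re-test, in milliseconds.
print(f"Two extra frames: {2 * FRAME_MS_60:.1f}ms")
```

The point of the sketch is that the window scales with pipeline depth: a deeper pipeline frees up more time at 60FPS, while a game that is already lean at 30FPS leaves the cloud very little to play with.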

So if there is the potential for a console-style experience via the cloud, perhaps it is not surprising that rumours are starting to circulate about Sony offering streaming gameplay. Our belief is that next-gen console gaming is going to consist of both Sony and Microsoft offering parallel services: streaming on the one hand, and a costlier full-fat local option for the core.

In short, what happened with Blu-ray and Netflix is almost certain to be mirrored with video games once infrastructure and tech mature, but with the latest Sony rumours seemingly addressing current-gen and PS3 in particular (something that seems overly optimistic) this opens up some intriguing possibilities - and limitations.

Could Sony Adopt Cloud Technology?

On a higher strategic level, offering a cloud service makes perfect sense for a platform holder like Sony. It desperately needs to start making money from its TV business and the cost of the client gaming hardware itself transforms from an expensive console that loses money with each unit sold into an integrated decoder chip that would already be inside the display itself, or at worst a decoder box like the excellent OnLive microconsole. Core gamers are likely to continue buying traditional consoles for the optimal experience and would pay a premium to do so.

But is the cloud ready for the PlayStation generation? Could we see PS3 gameplay streamed to set-top boxes and Smart TVs in the very near future?

Even assuming that the infrastructure holds up to the load induced by millions of gamers, the notion of directly streaming gameplay from server-side consoles clearly presents issues. Assuming there are banks of PlayStation 3s inside datacentres, we are left with the same lag-inducing capture/encode issue that GRID seeks to mitigate. Adding to the problem would be the fact that a key latency-saving technique adopted by Gaikai and OnLive - running games at 60Hz rather than 30Hz and pocketing the saving for capture/encoding/decoding/transmission - wouldn't be possible here. In terms of lag it sounds rather like something approaching the worst-case latency scenario envisaged by NVIDIA.

However, what many people forget is that PlayStation 3 already streams gameplay over IP and, in some respects, cloud is a natural extension of that. Hacks showing PlayStation Vita running a large library of PS3 titles via Remote Play demonstrate that the fundamental technology is already there. Resolution is a paltry 480x272, but Sony already has 480p streaming up and running behind the scenes on selected titles such as Killzone 3, and in theory, 720p could be 'doable'.

How does it work? Sony has libraries that encode the framebuffer into video - it's a process that is hived off to an SPU. Similar tech is used in titles like HAWX 2 and Just Cause 2 to save gameplay captures to HDD or upload them to YouTube. A single SPU is capable of encoding 720p at 30FPS at 5mbps, so it's 'just' a matter of finding the CPU resources.

Conceivably, this is possible without impacting resources available to game developers. Let's not forget that every PS3 already has a deactivated SPU - eight are incorporated into the silicon at Ken Kutaragi's insistence, but only seven are active. Back in the early days of PS3 production, these were disabled to improve chip yields, but we can safely assume that on the current, mature 45nm production process, that untouched SPU would be fully operational and simply turned off. So, custom PS3 datacentre servers need not be that expensive, then, and could use the existing chip design.

However, a look at HAWX 2's streaming efforts suggests that Sony would need to do a lot of work on improving image quality. What we have now isn't bad at all for 30ms of SPU time, but the encoder could only start its job once the frame has rendered, so we're looking at an additional 30ms of lag before the frame is even transmitted over the internet. Hardly ideal.
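The distinction between keeping up with the frame-rate and adding lag is worth spelling out. The sketch below takes the 30ms encode time quoted above and assumes, for illustration only, that rendering occupies the full 33.3ms frame interval of a 30FPS game and that encoding starts only once rendering has finished. The function names are hypothetical.

```python
def frame_interval_ms(fps):
    """Time between frames at a given frame-rate."""
    return 1000 / fps


def encode_keeps_up(encode_ms, fps):
    """True if the encoder finishes one frame before the next one arrives."""
    return encode_ms <= frame_interval_ms(fps)


def lag_before_transmit_ms(render_ms, encode_ms):
    """Serial pipeline: the encoder waits for the finished frame."""
    return render_ms + encode_ms


ENCODE_MS = 30  # SPU encode time quoted in the article

# Throughput: 30ms fits inside a 30FPS frame interval, but not a 60FPS one.
print(encode_keeps_up(ENCODE_MS, 30))  # True
print(encode_keeps_up(ENCODE_MS, 60))  # False

# Latency: assumed full-interval render plus the serial encode step.
total = lag_before_transmit_ms(frame_interval_ms(30), ENCODE_MS)
print(f"Lag before the frame leaves the server: {total:.1f}ms")
```

So a single SPU can sustain the stream at 30FPS with a few milliseconds to spare, but under these assumptions every frame still leaves the server around a frame later than it would have been scanned out locally.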

"Sony was streaming gameplay over IP five years ago. The PS3 features SPU libraries for real-time video compression used in Remote Play. Cloud is the natural evolution."

Realistically, the notion of PS3 servers does seem a little far-fetched and even with "onboard" encoding there are still question marks over performance. Theoretically there is nothing to stop third parties simply piggybacking onto existing PC servers at the cloud datacentres and using PC code to stream their games onto PS3, but the notion of Sony signing a deal to stream gameplay that locks out its own platform exclusives just seems fundamentally wrong.

That said, if the rumours of an AMD, x86-based PlayStation 4 turn out to be true, the deal starts to make a whole lot more sense: a GRID-style console variant may end up at datacentres, or else games could be coded for local and cloud (i.e. PC-based) SKUs. If there is anything to this cloud tie-up, we would expect it to be aimed squarely at Orbis/PS4, but it could also prove to be a seriously big deal for PlayStation Vita.

Sony's new handheld already hosts a more-than-capable media decoder, making it eminently suitable for cloud gameplay and it also means that our proposal of Xperia Play with OnLive as a viable next-gen handheld experience suddenly takes on an interesting new dimension. PlayStation-certified mobile devices suddenly become a lot more relevant in general as all of them have the required decoding hardware.

In the here and now though, for all the excitement of a major platform holder embracing cloud tech, there remain obvious issues in the quality of the overall cloud experience. The biggest is the sheer inconsistency of it all in terms of picture quality and response.

However, the fact is that three years on from the first OnLive reveal, we are still in first-generation territory. What the GeForce GRID presentation offers us is a frank assessment of all of cloud's shortcomings, but, crucially, it does seem to be proposing viable technological solutions to some of the challenges. Until we see some direct results of the hardware encoder built into the GRID GPU, we can't really comment on image quality (traditionally, hardware struggles to match software solutions), but the ideas surrounding latency reduction sound plausible. The question is just how quickly the surrounding infrastructure will improve in order to sustain a high-quality experience, 24/7...
