NVIDIA GeForce GTX 680 Review

Tomorrow's graphics tech today.

The era of the next-generation graphics core is finally upon us. NVIDIA claims that its new GTX 680 is the fastest and most power-efficient GPU ever made, offering significant performance improvements over its competition - and indeed its own range of current flagship products - while at the same time reducing the amount of juice it saps from the mains. It's a lofty claim but testing bears it out: NVIDIA's new "Kepler" architecture is truly something special, setting the stage for the next era in graphical performance.

Prior to the release of this new technology, recent GPU upgrades for PC haven't exactly shown enormous levels of improvement (indeed the Radeon 6x00 series cards were in some ways slower than their predecessors) and one of the main reasons behind this was the fact that both AMD and NVIDIA were only able to fabricate their silicon at 40nm. Moore's Law has historically been driven by lower fabrication processes - and the transition to 28nm is what will power next-gen visuals on both PC and console.

The GTX 680 offers approximately twice the performance per watt consumed compared to its illustrious predecessor, the GTX 580. Die-size drops from 520mm squared down to 295mm squared, yet the transistor count rises from 3bn up to 3.5bn. It features a mammoth 1536 CUDA cores, up from 512 (though the design is very different, so the cores are not directly comparable), while the GDDR5 memory gets upgraded from 1.5GB to 2GB, with RAM speed now reaching 6GHz - a remarkable achievement. Also intriguing is that the memory bus is actually reduced from 384-bit in the GTX 580 down to 256-bit.
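As a rough sanity check on those figures, the sketch below works out transistor density and peak memory bandwidth from the numbers quoted above. The function names are ours, and the GTX 580's effective memory data rate (roughly 4GHz) is an assumption taken from NVIDIA's published spec rather than from anything stated in this review.

```python
# Back-of-envelope sums using the figures quoted in this review.
# GTX580_MEM_GBPS is an assumption (roughly 4.0Gbps effective per pin,
# per NVIDIA's published spec); it is not stated in the article.

def density_m_per_mm2(transistors: float, die_mm2: float) -> float:
    """Millions of transistors per square millimetre of die."""
    return transistors / die_mm2 / 1e6


def bandwidth_gb_s(bus_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth: bytes per transfer times effective data rate."""
    return bus_bits / 8 * data_rate_gbps


GTX580_MEM_GBPS = 4.0  # assumed, see note above

gtx580_density = density_m_per_mm2(3.0e9, 520)
gtx680_density = density_m_per_mm2(3.5e9, 295)
density_gain = gtx680_density / gtx580_density

# Ideal area scaling for a 40nm -> 28nm shrink, for comparison.
ideal_shrink_gain = (40 / 28) ** 2

gtx580_bandwidth = bandwidth_gb_s(384, GTX580_MEM_GBPS)
gtx680_bandwidth = bandwidth_gb_s(256, 6.0)

print(f"Density gain: {density_gain:.2f}x (ideal shrink: {ideal_shrink_gain:.2f}x)")
print(f"Bandwidth: GTX 580 {gtx580_bandwidth:.0f}GB/s, GTX 680 {gtx680_bandwidth:.0f}GB/s")
```

On these assumptions the narrower 256-bit bus is offset by the faster memory, leaving peak bandwidth essentially unchanged, while transistor density roughly doubles - much as you would expect from the move from 40nm to 28nm.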

The gain in performance is also excellent - something we'll look at later on - but the basic facts speak for themselves. The GTX 680 is smaller, leaner, cooler (it really is very quiet indeed for a high-end GPU) and more efficient, and there's headroom left over in the technology for an even more monstrous processor - already rumours are circulating of a 2304 core ultra high-end card, with the 384-bit bus restored and 3GB of GDDR5 RAM on tap. However, in the here and now, the GTX 680 is the new state-of-the-art.

"Moore's Law has historically been driven by lower fabrication processes - and the transition to 28nm is set to power next-gen visuals on both PC and the new wave of consoles."

Physical Form Factor

At first glance, there's little to differentiate the GTX 680 from any other enthusiast dual-slot graphics card. However, there are a number of changes and improvements over the old GTX 580 that are worthy of comment, translating into useful gameplay features. First up, the number of display outputs has been boosted: the old combo of dual DVIs with a mini HDMI output has been given the push in favour of two DVIs, a full-size HDMI socket plus a brand new DisplayPort output.

The GTX 680 is capable of running four displays simultaneously - the DVIs and DisplayPort can be used for triple-screen surround gaming in both 2D and 3D, while the final output can be used for monitoring background apps.

Another change comes from the power socket allocation. Top-end GPUs in recent times have required the use of both eight-pin and six-pin PCI Express power from the PSU. The GTX 680 harkens back to earlier NVIDIA graphics cards, only requiring two six-pin inputs, thanks to the drop in the chip TDP from 250W down to 195W. This reduction in power draw is such that NVIDIA is happy to recommend a 550W power supply for running a GTX 680 system.
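To see why the lower TDP allows the simpler connector layout, here is a minimal sketch of the power budget sums. The per-connector limits (75W from the slot, 75W per six-pin, 150W per eight-pin) come from the PCI Express specification rather than from this review, and the helper name is ours.

```python
# Power available to a graphics card under the PCI Express specification.
# These limits are spec figures, not numbers taken from the review.
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}


def board_power_budget(connectors: list[str]) -> int:
    """Total watts available from the slot plus the auxiliary connectors."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


two_six_pin = board_power_budget(["6-pin", "6-pin"])
six_plus_eight = board_power_budget(["6-pin", "8-pin"])

# TDP figures quoted in the review.
GTX_580_TDP_W = 250
GTX_680_TDP_W = 195

print(f"Two six-pin: {two_six_pin}W, six-pin plus eight-pin: {six_plus_eight}W")
print(f"GTX 680 fits two six-pin: {GTX_680_TDP_W <= two_six_pin}")
print(f"GTX 580 fits two six-pin: {GTX_580_TDP_W <= two_six_pin}")
```

A 195W part sits comfortably inside the 225W that two six-pin inputs and the slot can supply, whereas a 250W part does not.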

This drive for efficiency yields dividends elsewhere. The old GTX 580 was a fairly quiet card even under load, but its replacement produces significantly less noise. The smaller, cooler chip is backed up by a triple heat-pipe design that is complemented by a heat sink with a custom fin stack "shaped for better air-flow", according to NVIDIA. Acoustic dampening materials are added to the fan itself to reduce noise still further.

Introducing GPU Boost

"The new GPU Boost technology dynamically overclocks the graphics card if the current game is not making the most of the default clock speed."

One of the most interesting advances made with the new Kepler architecture is the inclusion of an auto-overclocking mechanism, dubbed "GPU Boost". The idea behind this is pretty straightforward, working as a graphics core equivalent to the Turbo Boost technology added to recent Intel processors. Onboard monitors constantly look at the power draw on the card, and if the 195W TDP limit is not being attained, the GPU ups its clock-speed dynamically.
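The basic idea can be sketched as a simple feedback loop. To be clear, this is a toy model and not NVIDIA's actual algorithm: the linear power model, the 10MHz step size and the 1150MHz ceiling are all hypothetical placeholders, and only the 1005MHz base clock and 195W limit come from the figures quoted in this review.

```python
def next_clock(clock_mhz, power_w, *, tdp_w=195, base_mhz=1005,
               max_mhz=1150, step_mhz=10):
    """One control step: raise the clock while under the power limit,
    back off (never below the base clock) when over it.

    max_mhz and step_mhz are hypothetical values chosen for illustration.
    """
    if power_w < tdp_w:
        return min(clock_mhz + step_mhz, max_mhz)
    if power_w > tdp_w:
        return max(clock_mhz - step_mhz, base_mhz)
    return clock_mhz


def settle(watts_per_mhz, ticks=100, base_mhz=1005):
    """Run the controller against a toy workload whose power draw is
    assumed to scale linearly with clock speed."""
    clock = base_mhz
    for _ in range(ticks):
        measured_power = watts_per_mhz * clock  # stand-in for the onboard monitors
        clock = next_clock(clock, measured_power, base_mhz=base_mhz)
    return clock


light_load = settle(0.15)  # well under the power limit at base clock
heavy_load = settle(0.20)  # already over the power limit at base clock
print(light_load, heavy_load)
```

In this model a light workload climbs to the ceiling, while a workload that already saturates the power budget stays at the base clock - which would explain why some applications see no boost at all.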

Some applications will see no increase in speed at all - NVIDIA cites the 3DMark 11 benchmark as one of these programs - but others can see a very useful increase. Take Battlefield 3, for example - the very definition of a state-of-the-art PC game. The core clock, which runs at 1005MHz, boosts up to 1150MHz during BF3 gameplay - an overclock of roughly 14 per cent that occurs automatically without any user intervention.

Despite this, the user is still able to overclock the GTX 680 and by using the EVGA Precision tool, one slider controls everything: when you adjust the core clock, the GPU boost clock adjusts with it. In NVIDIA's presentation, Battlefield 3 seemed to reach 1250MHz with no real effort whatsoever. NVIDIA describes the GTX 680 as "a monster overclocker". The presentations certainly looked impressive, and it'll be interesting to see how the enthusiast sites get on with extracting further potential from the card.

GTX 680: Performance Analysis

PC benchmarking sites have refined analysis of CPU and GPU technologies to a fine art, thanks in no small part to the FRAPS benchmarking tool, which has the ability to measure the time taken to render every frame - even if those frames don't end up being fully rendered on-screen. Most displays update at 60Hz, but these days even mid-range graphics cards can run high-end titles at frame-rates that far exceed this. The result is what we call a "double tear" - screen-tear is evident, but it's not because of lower frame-rates as we might see on console. It's actually because too much visual data is being processed to be rendered on-screen. When we're buying an enthusiast-level graphics card, this kind of off-putting visual artifact isn't really what we want.

So in assessing the performance of the GTX 680, we wanted to cover a couple of bases. We wanted to get an idea of maximum performance by using some established benchmarks, but we also wanted to show how the new Kepler GPU runs with the kinds of settings we actually use ourselves when gaming. In these experiments, our target is a 1080p resolution, with maximum settings engaged on a range of truly testing titles, and we wanted v-sync engaged in order to completely remove the off-putting tearing.

"NVIDIA's own comparisons with AMD's top-end Radeon HD 7970 seem to show increases in performance of between 15 and 25 per cent, up to a remarkable 43 per cent boost for Skyrim."

Our test station is the same PC that's often used in our Face-Off articles: it has a Core i7 920 quad core CPU, overclocked from the base 2.67GHz to a rock-solid 3.33GHz. This is backed by 9GB of 1600MHz DDR3 memory, with games running from a 1TB Samsung F1 hard drive. The 64-bit version of Windows 7 runs the show. This unit features a 1.5GB NVIDIA GTX 580, which we'll be using for comparison with its successor. We'd have loved to have used the AMD Radeon 7970 instead, but alas we did not have this card available. For the record, NVIDIA's own comparisons with the 7970 are quite eye-opening: the firm claims a 43 per cent performance boost in Skyrim at 1080p on ultra settings, though other benchmarks seem to average between 15 and 25 per cent.

For an independent comparison across a range of GPUs, you can't go wrong with AnandTech's exhaustive analysis, which comes down in favour of NVIDIA, while on the flipside, TechReport's assessment suggests that the 7970 may well be the marginally better buy. Hexus says that the GTX 680 is faster and quieter than a price-comparable 7970, a sentiment backed up by HardOCP. Finally, Tom's Hardware disputes the 2x performance per watt claim made by NVIDIA, but says that "GeForce GTX 680 is now the fastest single-GPU graphics card, and not by a margin that leaves room to hem or haw".

Benchmarks

Our own benchmarks follow a pretty standard form. To begin with, we ran 3D Mark 11 at the standard 720p Performance and 1080p Extreme settings, first with the GTX 580 using the latest drivers, then swapping in the new GTX 680. On the 720p test we saw an immediate boost in the order of 35 per cent, and the gulf grew bigger still when it came to tackling the 1080p Extreme tests. Bearing in mind the reduction in power consumption and all the benefits that come with it, this is pretty impressive stuff.

Futuremark 3DMark 11: Tested on standard Performance and Extreme settings.

| | GTX 580 (Performance) | GTX 680 (Performance) | GTX 580 (Extreme) | GTX 680 (Extreme) |
|---|---|---|---|---|
| Graphics Score | 6219 | 9100 | 1914 | 2875 |
| Physics Score | 6559 | 7436 | 6142 | 7433 |
| Combined Score | 6181 | 6718 | 2555 | 3446 |
| 3DMark 11 Score | P6263 | P8512 | X2112 | X3117 |

Moving on to the Unigine Heaven test, with settings at the absolute maximum, we see a 32 per cent increase in frame-rates and overall score.

Unigine Heaven Benchmark 3.0 Basic: DX11, 1920x1080, 8xAA, high shaders, high textures, trilinear filtering, anisotropy x16, occlusion enabled, refraction enabled, volumetric enabled, tessellation extreme.

| | GTX 580 | GTX 680 |
|---|---|---|
| Average FPS | 34.9 | 46.1 |
| Min FPS | 17.5 | 22.6 |
| Max FPS | 91.4 | 116.1 |
| Unigine Score | 880 | 1162 |

"On all but one of the benchmarking tests that pit the GTX 580 against its successor, we see performance boosts in excess of 30 per cent."

The only benchmark to underwhelm is the built-in Frontline performance analysis tool in 4A Games' Metro 2033. Here, the GTX 580 appeared to match the GTX 680 almost point for point, with just a minuscule lead enjoyed by the new NVIDIA hardware.

Metro 2033 Frontline Benchmark: DX11, 1920x1080, very high quality, 4x MSAA, AF 4x anisotropy, advanced PhysX enabled, tessellation enabled, depth-of-field enabled.

| | GTX 580 | GTX 680 |
|---|---|---|
| Average FPS | 27.03 | 27.80 |
| Min FPS | 9.86 | 10.21 |
| Max FPS | 53.71 | 54.36 |

Moving onto the benchmark test contained in Batman: Arkham City, we ramped up all settings to maximum, engaged the computationally expensive DX11 features and ran the benchmark twice - firstly with no PhysX features enabled, and secondly at the "normal" setting. We did briefly experiment with the maximum physics acceleration but performance was enormously impacted on both GPUs, so we left that alone. With PhysX active we see a 20 per cent improvement over the GTX 580, rising to 35 per cent when hardware physics is completely disabled.

Batman: Arkham City Benchmark: DX11, 1920x1080, 8x MSAA, high tessellation, very high detail, all other settings enabled.

| | GTX 580 (PhysX normal) | GTX 680 (PhysX normal) | GTX 580 (PhysX off) | GTX 680 (PhysX off) |
|---|---|---|---|---|
| Average FPS | 42 | 50 | 53 | 72 |
| Min FPS | 20 | 20 | 26 | 15 |
| Max FPS | 56 | 73 | 72 | 99 |
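For anyone wanting to check the percentage gains quoted in this section, they can be reproduced from the headline figures in the tables above. This is just a small sketch; the function and dictionary names are ours.

```python
def uplift_pct(gtx580: float, gtx680: float) -> float:
    """Percentage improvement of the GTX 680 result over the GTX 580 result."""
    return (gtx680 - gtx580) / gtx580 * 100


# (GTX 580, GTX 680) pairs copied from the benchmark tables.
results = {
    "3DMark 11 Performance score": (6263, 8512),
    "3DMark 11 Extreme score": (2112, 3117),
    "Unigine Heaven average FPS": (34.9, 46.1),
    "Metro 2033 average FPS": (27.03, 27.80),
    "Batman: Arkham City average FPS (PhysX normal)": (42, 50),
    "Batman: Arkham City average FPS (PhysX off)": (53, 72),
}

for name, (gtx580, gtx680) in results.items():
    print(f"{name}: {uplift_pct(gtx580, gtx680):+.1f}%")
```

The Metro 2033 result works out at under three per cent, which is why it stands out so clearly from the rest.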

Gameplay Analysis

DirectX 11 has brought a new visual standard to video games but the requirements on GPU power for top-end features can be truly immense. Games like Crysis 2 and Battlefield 3 at ultra settings bring all but the most powerful graphics cores to their knees at our preferred 1080p resolution, necessitating some nipping and tucking at the graphical quality levels in order to get a more sustained performance level.

Crysis 2 really puts GPUs through the wringer: its DirectX 11 upgrade features tessellation and displacement mapping, parallax occlusion mapping, more realistic shadows (even particles get them now), bokeh depth-of-field and water rendering, and enhanced motion blur. Released alongside the DX11 patch was a 1.7GB high resolution texture pack and both upgrades in combination produce some incredible visual results. The difference compared to the console versions is remarkable - this is truly next-gen stuff.

Comparing the GTX 680 to its predecessor shows a good 20 per cent improvement in frame-rates when run on the exact same settings, and when rendering identical material. Remember that with v-sync engaged we are effectively limiting the 680 to a 60Hz refresh, even if it's capable of much more. The overall result is clearly noticeable during gameplay: faster refresh, more responsive. In the heat of the action it's far from the locked 60FPS we'd like, but the improvement over the best of the last gen is clearly worthwhile. That said, a look at the final clip - asynchronous gameplay from a heated firefight - demonstrates that multiple rendering effects in combination with Crysis 2's advanced physics can still pose serious issues, even on a top-end system like this.

"Crysis 2 on DX11 max settings exerts a monstrous amount of work onto the GPU, and the GTX 680 offers a clear boost in performance over its predecessor."

Designed from the ground up for DirectX 11, DICE's Battlefield 3 is another game that represents the current state-of-the-art in videogaming's visual arts - advanced deferred shading, texture streaming, order independent transparencies, tessellated landscapes, high dynamic range lighting... the list of DX11 features utilised is absolutely remarkable and it all translates into one of the most astounding visual showcases for the power of enthusiast PC hardware.

Ultra level settings in combination with 1080p resolutions and beyond can bring frame-rates crashing down, but the overall sense we get from the GTX 680 is very similar to the Crysis 2 experience. At times we see a good 20 per cent performance boost when rendering the exact same scenes, and while frame-rates are still compromised in the heat of the more intense fire-fights, the 680 clearly has a more consistent performance level overall.

The takeaway is fairly self-evident: a superb Battlefield 3 experience at 1080p can be enjoyed with both sets of NVIDIA hardware, but that extra 20 per cent of power is not to be sniffed at. Once settings have been tweaked, it's the 680 that will provide more visual features at frame-rates closer to that locked 60FPS.

"With anti-aliasing engaged, the original Crysis engine exerts much more pressure on the GPU than its DX11-equipped sequel. Not even the GTX 680 can handle it with any kind of consistent frame-rate."

Rounding off our performance tests we went back to the original Digital Foundry Grail Quest - running the original Crysis, or indeed its Warhead side-story, at 1080p60 with v-sync engaged. In truth, we'd given up on this some years ago - the game hails from an era where optimisation and multi-core support weren't anywhere near as much of a priority for Crytek as they are now in its new guise as a cross-platform developer. But regardless, we were curious to see how the latest hardware could cope with the old game. We used the DirectX 9 renderer for this test, but we ran with 4x MSAA engaged (something we never dared dream of in our original tests) with all settings ramped up to the Enthusiast level.

The results are perhaps even more pronounced than they were in the Crysis 2 and Battlefield 3 tests in favour of the GTX 680, but still some way off the ideal 1080p60. In the final gameplay clip based on intense combat we see that frame-rates can dip below 30FPS on the GTX 580 while the new card keeps its head above the same threshold.

Adaptive V-Sync For Smoother Play

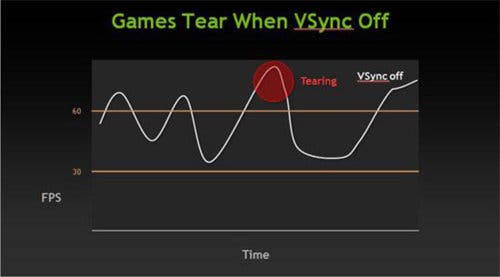

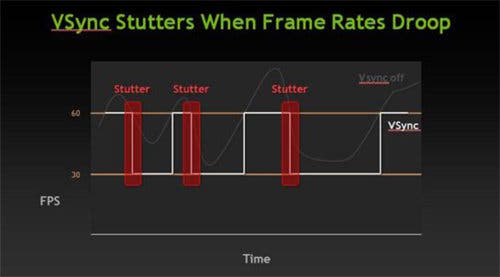

Our chosen technique in assessing gameplay performance may not go down well with some, and it does compromise performance to a certain degree. By engaging v-sync, we are limiting performance to 60 frames per second and by forcing the GPU to wait for the next screen refresh before beaming over a new frame, we are also stalling frame-rates. But therein lies one of the problems with v-sync on PC gaming - the only options available are "on" and "off", and each option presents some genuine issues when looking for a smoother, tear-free gameplay experience.

With v-sync turned off, screen-tear is a constant companion. If the game is running at less than 60 frames per second, tearing features "left-over" information from the previous frame. If the graphics card runs the game faster than 60FPS, we have what's known as a "double-tear" scenario - too many images being generated with the display not refreshing fast enough to display them.

With v-sync turned on, tearing is completely eliminated. The problem is that at 60Hz, the GPU must render a frame within a 16.67ms budget to meet the next screen refresh. If the frame is not generated in that very small time window, the system effectively waits until the display refreshes again. A smooth 60FPS effectively drops down to 30 until frame-rendering comes back into budget, introducing noticeable, obvious judder.
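The arithmetic behind that drop is worth spelling out. The sketch below assumes simple double-buffering with a constant render time, where each finished frame is held until the next refresh; the function name is ours.

```python
import math


def vsync_fps(render_ms: float, refresh_hz: int = 60) -> float:
    """Effective frame-rate with strict v-sync, assuming double-buffering
    and a constant per-frame render time."""
    # Each frame occupies a whole number of refresh intervals, rounded up.
    refreshes = max(1, math.ceil(render_ms * refresh_hz / 1000))
    return refresh_hz / refreshes


for render_ms in (10.0, 16.0, 17.0, 34.0):
    print(f"{render_ms}ms per frame -> {vsync_fps(render_ms):.0f}FPS")
```

Under these assumptions, a frame that takes 17ms rather than 16ms misses the refresh by a whisker and the displayed frame-rate halves from 60FPS to 30FPS.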

"The new Adaptive V-Sync tech aims to reduce screen-tear to a minimum, locking at 120, 60 or 30FPS and only tearing when dropping below the target frame-rate."

The solution is pretty obvious, and has been utilised on console games for a very long time: sync on 60Hz, but if the frame runs over budget, flip the framebuffer anyway. We've previously referred to it as a soft v-sync, Remedy calls it smart v-sync, and now it has a new name on its PC debut: adaptive v-sync. The same basic principles apply though: with adaptive v-sync you shoot for a 120, 60 or even 30FPS update, and introduce tearing if it falls below.
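To illustrate the principle, here is a toy timeline model comparing strict and adaptive v-sync on a 60Hz display. It is a sketch under stated assumptions rather than a description of NVIDIA's driver: it assumes double-buffering, with each frame starting to render the moment the previous one is flipped, and all names are ours.

```python
REFRESH_US = 16_667  # one 60Hz refresh interval, rounded to whole microseconds


def run(frame_times_us, adaptive):
    """Return (finish time in microseconds, torn frame count) for a run of frames.

    Toy double-buffered model: each frame starts rendering when the
    previous one is flipped to the display.
    """
    now = 0
    torn = 0
    for render_us in frame_times_us:
        done = now + render_us
        # The first refresh after the previous flip is this frame's deadline.
        deadline = (now // REFRESH_US + 1) * REFRESH_US
        if done <= deadline:
            now = deadline  # on time: wait for the refresh, no tear
        elif adaptive:
            now = done      # late: flip immediately and accept a tear
            torn += 1
        else:
            # Late with strict v-sync: stall until the next refresh.
            now = -(-done // REFRESH_US) * REFRESH_US
    return now, torn


frames = [15_000, 15_000, 20_000, 15_000]  # the third frame runs over budget
print("strict:  ", run(frames, adaptive=False))
print("adaptive:", run(frames, adaptive=True))
```

In this run, strict v-sync needs five refreshes to show four frames, while the adaptive approach finishes sooner at the cost of two torn frames. The frame following the late one also tears in this model, because it is now out of phase with the display - which is why the technique works best when dips below the target are rare.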

This is an approach that has long been advocated by John Carmack, who implemented it for the PC version of Rage (thereby matching the console versions), but found that the necessary API was not added to the AMD and NVIDIA drivers, meaning that users couldn't access it in the final game. With Adaptive V-Sync, it can be set on the control panel and engaged on any game. While it's only a feature of the GTX 680 pre-release driver we have, hopefully it will be widely deployed for older NVIDIA hardware too. If it can run on the PS3 and Xbox 360, it should in theory run on any hardware.

But does the PC version work? It definitely helps in reducing the stutter caused by v-sync, but it still requires the user to adjust settings so the game runs at 60Hz locked most of the time in order to be effective. Adaptive V-Sync should be viewed as a "safety net" for maintaining smooth performance on the odd occasions where frame-rate dips below 60.

Beyond the Silicon: New Anti-Aliasing Options

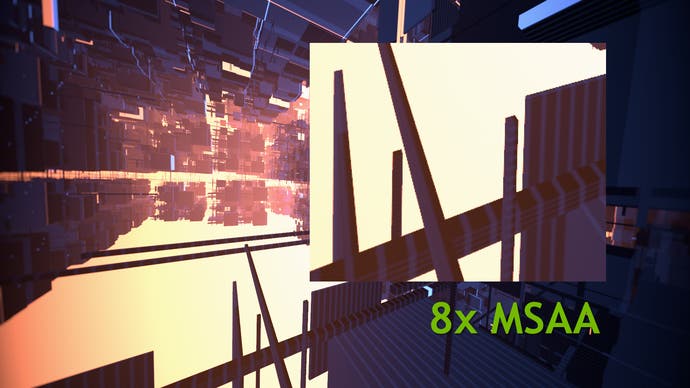

NVIDIA is keen to press home that the new Kepler offering is about more than just the silicon, impressive as it is. The company has some of the best minds in software engineering and has been at the forefront of advances in several fields of rendering - first and foremost, there's anti-aliasing.

The traditional solution for improved anti-aliasing performance has been to increase raw power and memory and let existing techniques in hardware multi-sampling anti-aliasing (MSAA) do the job. The problem is that the latest rendering technologies simply don't work very well with MSAA - deferred lighting solutions as seen in games including Dead Space 2 and Deus Ex: Human Revolution use an entirely different set-up that consumes memory on a much larger scale, meaning that the RAM simply isn't there for MSAA to be available for developers.

Digital Foundry has covered the ingenious solutions offered up by developers - the most common of which are MLAA and FXAA, which have been quickly taken up on console by game-makers eager to reap the rewards in image quality while freeing up the GPU and RAM resources previously sucked up by MSAA.

"FXAA and the newly created TXAA look to overcome the incompatibilities between traditional MSAA and the latest deferred shading rendering techniques."

If you're looking to check out this Samaritan image with no anti-aliasing at all in order to compare with the images above, we've got that covered too.

FXAA is actually an NVIDIA innovation, rolled out as open source to game-makers at large, achieving a phenomenal level of take-up in less than a year. In its latest drivers, NVIDIA is making high quality FXAA a control panel feature, meaning excellent post-process anti-aliasing is now available for hundreds of games without the need for hacked "injectors". However, it seems that FXAA is just the beginning of the story. Now the engineers at NVIDIA have trumped it, with TXAA.

Introducing TXAA

TXAA, demonstrated running on the new Kepler architecture, has a multi-sampling component (equivalent to the cost of either 2x or 4x MSAA depending on the implementation chosen by developers), but uses it more smartly in combination with other forms of anti-aliasing. Elements of FXAA are combined with an optional temporal AA component - the kind of half-pixel offset jitter we've previously seen in the PC version of Crysis 2, and the results look first-class.

In NVIDIA's own demo, which presents a range of low and high contrast jaggies, the basic TXAA implementation appears to exceed the quality of 8x (and even 16x at times) MSAA while incurring a performance hit equivalent to 2x. The results are quite remarkable, easily handling the sub-pixel detailing that post-process AA has trouble with. Maybe the era of MSAA is finally reaching its conclusion, with "smart" technology finally providing better overall results than raw computational brute force.

A comparison image of the TXAA demo with no anti-aliasing active is also available for download. A good reference point for the shots above.

It's difficult to tell right now because all we've seen of TXAA is the NVIDIA demo in controlled conditions, and unfortunately it's not a feature that can be enabled via the control panel - developers will need to explicitly add support to their games.

However, bearing in mind the NVIDIA engineers' pedigree with anti-aliasing solutions, we wouldn't be surprised if it does turn out to be the "next big thing". According to NVIDIA, TXAA is set to be included in the next-gen Unreal Engine 4, Crytek is also going to support it, plus we can expect it to crop up in a number of titles, with EVE Online and Borderlands 2 already confirmed.

PhysX Strikes Back

"The PhysX technology looks set to be given another push, with comprehensive support already implemented in Gearbox's highly anticipated Borderlands 2."

NVIDIA continues to refine its PhysX technology, which worked so well in enhancing titles like Batman: Arkham City. The processing power afforded by the Kepler architecture opens up some intriguing possibilities. At NVIDIA's press event we were treated to a demo of Borderlands 2 with PhysX enhancements including some impressive water simulation, enhanced (destructible!) cloth physics and volumetric smoke trails from rockets, which animated beautifully.

It has to be said that the game we saw was still looking pretty early and performance in this Borderlands 2 demo wasn't exactly exemplary, but the effects being demonstrated did look quite superb. PhysX isn't "free", incurring a GPU hit, but bearing in mind how much extra processing power is sapped by incremental effects, it may well be the case that dialling down other effects will be worth it in order to enjoy enhancements like this.

NVIDIA also demonstrated how PhysX hair and cloth simulation is being used even in the most unlikely of places. QQ Dance 2 is apparently immensely popular in China, and the game has hair and cloth simulation in place provided by PhysX.

NVIDIA GeForce GTX 680: The Digital Foundry Verdict

In terms of both benchmarks and the gameplay experience, the new GeForce GTX 680 does a great job in exceeding the performance of its predecessor, and should return benchmark honours to NVIDIA after AMD's recent HD 7970 launch. However, what really impresses us with the new hardware is that the manufacturer hasn't just relied on the newer fabrication process to crank up its performance level. The 28nm tech is backed up by an innovative, power-efficient design that promises great things for future GPUs based on the same Kepler architecture.

The chances are that unless you're a die-hard PC enthusiast, the GTX 680 is probably too much for you: it's a high-end piece of kit with a £430 price point ($499 in the USA). But this is just the first product in what will be a full line-up encompassing a range of budgets, and all the signs are that the affordable products are going to be very special. At 295mm squared, the die-size of the top-end GTX 680 is significantly smaller than the 520mm squared of the 448 core GTX 560 Ti, which currently costs around £200. Indeed, it's even smaller than the 360mm squared GTX 560 (£130). What this means is that there's plenty of leeway for NVIDIA to produce some killer mid-range graphics cards at very keen price-points.

"While this top-end offering is too expensive for the average gamer, the strength of the underlying architecture speaks for itself and forthcoming mid-range cards should be special."

Power efficiency is also impressive. In our Alienware X51 review, we noted that the 160W limit on replacement graphics cards means that you'd need to be very careful in choosing a replacement GPU for the supplied GT 525 or GTX 555. With the GTX 680 maxing out at a 195W TDP, there shouldn't be any problems on less demanding mid-range cards working in that lovely Alienware chassis, or indeed in any small form factor PC, and the performance boost should be exemplary.

Kepler is also set to appear in a mobile package. Indeed, the Acer Timeline Ultra M3, with a next-gen GT 640M chip, plays Battlefield 3 on ultra settings and should be available by the end of the month (look out for a DF Hardware review soon). Now admittedly this is at the 1366x768 native resolution of the laptop and it's nowhere near 60FPS, but we've seen it running and it certainly looked playable enough. Tone that down to the high settings and we have the mouth-watering prospect of thin and light ultrabooks offering up a 720p experience that easily exceeds what's currently being offered by the Xbox 360 and PS3 - something we can't wait to try out.

The Kepler design also hints heavily at the kind of performance we might be seeing on next-gen consoles: it's almost certain that both PlayStation 4 and the new Xbox will feature 28nm graphics cores. At the Epic presentation at GDC 2012, Epic's Mark Rein strongly suggested that the kind of performance we see from the GTX 680 is what they would expect to see from the new wave of consoles from Sony and Microsoft. Now, the notion of sticking a 195W chip into a console doesn't sound particularly realistic, but a smaller chip with a similar design ethos tightly integrated into a fixed architecture? Now we're talking...