PlayStation 5 uncovered: the Mark Cerny tech deep dive

Architecting the next generation.

On March 18th, Sony finally broke cover with in-depth information on the technical make-up of PlayStation 5. Expanding significantly on previously discussed topics and revealing lots of new information on the system's core specifications, lead system architect Mark Cerny delivered a developer-centric presentation that laid out the core foundations of PlayStation 5: power, bandwidth, speed, and immersion. A couple of days prior to the talk going live, Digital Foundry spoke in depth with Cerny on the topics covered. Some of that discussion informed our initial coverage, but we have more information. A lot more.

But to be clear here, everything in this piece centres on the topics in Cerny's discussion. There's much to assimilate here, but what you won't get are any further revelations about PlayStation 5 strategy - and it's not for want of asking. In our prior meeting back in 2016, Cerny talked in depth about how Sony was wedded to the concept of the console generation and the hardware revealed certainly attests to that. So is cross-gen development a thing for first-party developers? While stressing again that he's all in on the concept of console generations (as opposed to PC-style, more gradual innovation) he wasn't going to talk software strategy, and to be fair, that's not really his area.

Cerny also delivered PlayStation 4 - which he defined as 'super-charged PC architecture' way back in 2013. It was an approach that helped deliver a developer-friendly multi-platform golden age... but is PlayStation 5 a return to the more 'exotic' philosophy we saw in prior generation console design? Cerny shared little, except to say that PS5 design is easy for PlayStation 4 developers to get to grips with, but digging deeper into the new system's capabilities, there are many aspects of the PS5 design that PCs will be hard-pressed to match.

However, in going deeper on the topics covered in his developer presentation, Mark Cerny comes alive. There's an obvious, genuine passion and enthusiasm for the hardware he has helped to develop - and that's where you'll get maximum value in this article. In our online meeting, we cover a range of topics:

- PlayStation 5's innovative boost clock - how does it actually work?

- What was required from a CPU perspective to deliver backwards compatibility?

- What are the crucial advantages of the SSD and how are they delivered?

- How does 3D audio actually work - and just how powerful is the Tempest engine?

- How does the new 3D audio system interface with TV speakers and 5.1/7.1 surround set-ups?

What follows is undoubtedly deep and on the technical side - a chance to more fully explore some of the topics raised in the presentation. A few times throughout the conversation, Cerny suggested further research, one of the reasons we didn't (indeed, couldn't) go live straight away after the event. Needless to say, before going on, I would highly recommend watching Mark's presentation in its entirety, if you haven't already.

PlayStation 5's boost clocks and how they work

One of the areas I was particularly interested to talk about was the boost clock of the PlayStation 5 - an innovation that essentially gives the system on chip a set power budget based on the thermal dissipation of the cooling assembly. Interestingly, in his presentation, Mark Cerny acknowledged the difficulties of cooling PlayStation 4 and suggested that having a maximum power budget actually made the job easier. "Because there are no more unknowns, there's no need to guess what power consumption the worst case game might have," Cerny said in his talk. "As for the details of the cooling solution, we're saving them for our teardown, I think you'll be quite happy with what the engineering team came up with."

Regardless, the fact is that there is a set power level for the SoC. Whether we're talking about mobile phones, tablets, or even PC CPUs and GPUs, boost clocks have historically led to variable performance from one example to the next - something that just can't happen on a console. Your PS5 can't run slower or faster than your neighbour's. The developmental challenges alone would be onerous to say the least.

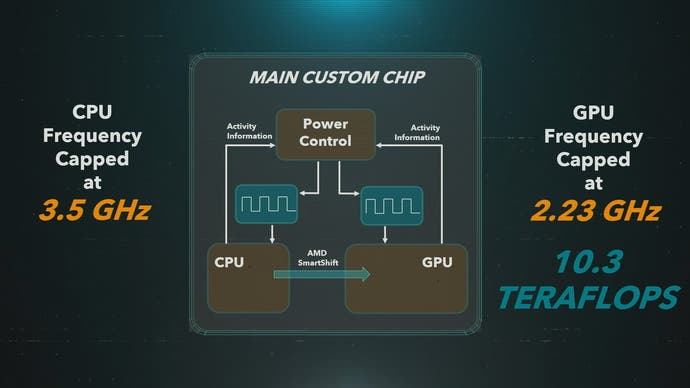

"We don't use the actual temperature of the die, as that would cause two types of variance between PS5s," explains Mark Cerny. "One is variance caused by differences in ambient temperature; the console could be in a hotter or cooler location in the room. The other is variance caused by the individual custom chip in the console, some chips run hotter and some chips run cooler. So instead of using the temperature of the die, we use an algorithm in which the frequency depends on CPU and GPU activity information. That keeps behaviour between PS5s consistent."

Inside the processor is a power control unit, constantly measuring the activity of the CPU, the GPU and the memory interface, assessing the nature of the tasks they are undertaking. Rather than judging power draw based on the nature of your specific PS5 processor, a more general 'model SoC' is used instead. Think of it as a simulation of how the processor is likely to behave, and that same simulation is used at the heart of the power monitor within every PlayStation 5, ensuring consistency in every unit.
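As a rough illustration of the idea, the sketch below derives a clock purely from workload activity, using one shared power model for every console. Everything here other than the 2.23GHz peak is an assumption made for the example: the normalised activity figure, the cubic power model and the function names are hypothetical, and the real power monitor is not publicly documented.

```python
# Toy model: the clock depends only on workload activity, never on the
# temperature of an individual chip, so every unit behaves identically.
# All constants except the 2.23GHz peak are illustrative assumptions.

GPU_MAX_GHZ = 2.23    # peak GPU clock quoted in the article
POWER_BUDGET = 1.0    # normalised power budget (hypothetical)


def modelled_power(activity: float, freq_ghz: float) -> float:
    """Power predicted by a shared 'model SoC' (assumed cubic in frequency)."""
    return activity * (freq_ghz / GPU_MAX_GHZ) ** 3


def select_frequency(activity: float) -> float:
    """Highest frequency whose modelled power fits inside the budget."""
    if modelled_power(activity, GPU_MAX_GHZ) <= POWER_BUDGET:
        return GPU_MAX_GHZ
    # Solve activity * (f / f_max)^3 = budget for f.
    return GPU_MAX_GHZ * (POWER_BUDGET / activity) ** (1 / 3)


def console_frequency(activity: float, die_temp_c: float) -> float:
    """die_temp_c is deliberately ignored: it would differ between units."""
    return select_frequency(activity)


# Same workload, a cool cabinet versus a warm one: identical clocks.
print(console_frequency(1.2, die_temp_c=40.0) == console_frequency(1.2, die_temp_c=80.0))
```

Because the temperature argument never enters the calculation, two consoles running the same scene land on the same frequency, which is the property Cerny describes.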

"The behaviour of all PS5s is the same," says Cerny. "If you play the same game and go to the same location in the game, it doesn't matter which custom chip you have and what its transistors are like. It doesn't matter if you put it in your stereo cabinet or your refrigerator, your PS5 will get the same frequencies for CPU and GPU as any other PS5."

Developer feedback raised two concerns. The first was the idea that not all PS5s would run in the same way, something the 'model SoC' concept addresses. The second was the nature of the boost: would frequencies hit a peak for a set amount of time before throttling back? This is how smartphone boost tends to operate.

"The time constant, which is to say the amount of time that the CPU and GPU take to achieve a frequency that matches their activity, is critical to developers," adds Cerny. "It's quite short, if the game is doing power-intensive processing for a few frames, then it gets throttled. There isn't a lag where extra performance is available for several seconds or several minutes and then the system gets throttled; that isn't the world that developers want to live in - we make sure that the PS5 is very responsive to power consumed. In addition to that the developers have feedback on exactly how much power is being used by the CPU and GPU."

Mark Cerny sees a time when developers will begin to optimise their game engines in a different way - to achieve optimal performance for the given power level. "Power plays a role when optimising. If you optimise and keep the power the same you see all of the benefit of the optimisation. If you optimise and increase the power then you're giving a bit of the performance back. What's most interesting here is optimisation for power consumption, if you can modify your code so that it has the same absolute performance but reduced power then that is a win."

In short, the idea is that developers may learn to optimise in a different way: achieving identical results from the GPU, but faster, thanks to the higher clocks that power-efficient code allows. "The CPU and GPU each have a power budget, of course the GPU power budget is the larger of the two," adds Cerny. "If the CPU doesn't use its power budget - for example, if it is capped at 3.5GHz - then the unused portion of the budget goes to the GPU. That's what AMD calls SmartShift. There's enough power that both CPU and GPU can potentially run at their limits of 3.5GHz and 2.23GHz, it isn't the case that the developer has to choose to run one of them slower."
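The budget hand-off Cerny describes can be pictured with a few lines of arithmetic. This is only a sketch: the wattages are invented for the example (Sony has not published the real budgets) and the function name is hypothetical.

```python
# Sketch of budget sharing: power the CPU leaves unused is handed to the GPU.
# The wattages are invented for illustration; real budgets are not public.

def share_budget(cpu_budget_w: float, gpu_budget_w: float, cpu_draw_w: float):
    """Return (cpu_allowance, gpu_allowance) after shifting unused CPU power."""
    cpu_used = min(cpu_draw_w, cpu_budget_w)
    unused = cpu_budget_w - cpu_used
    return cpu_used, gpu_budget_w + unused


cpu_w, gpu_w = share_budget(cpu_budget_w=50.0, gpu_budget_w=150.0, cpu_draw_w=35.0)
print(cpu_w, gpu_w)  # 35.0 165.0
```

The total stays fixed at the combined budget; only the split between the two processors moves.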

"There's another phenomenon here, which is called 'race to idle'. Let's imagine we are running at 30Hz, and we're using 28 milliseconds out of our 33 millisecond budget, so the GPU is idle for five milliseconds. The power control logic will detect that low power is being consumed - after all, the GPU is not doing much for that five milliseconds - and conclude that the frequency should be increased. But that's a pointless bump in frequency," explains Mark Cerny.

At this point, the clocks may be faster, but the GPU has no work to do. Any frequency bump is totally pointless. "The net result is that the GPU doesn't do any more work, instead it processes its assigned work more quickly and then is idle for longer, just waiting for v-sync or the like. We use 'race to idle' to describe this pointless increase in a GPU's frequency," explains Cerny. "If you construct a variable frequency system, what you're going to see based on this phenomenon (and there's an equivalent on the CPU side) is that the frequencies are usually just pegged at the maximum! That's not meaningful, though; in order to make a meaningful statement about the GPU frequency, we need to find a location in the game where the GPU is fully utilised for 33.3 milliseconds out of a 33.3 millisecond frame.

"So, when I made the statement that the GPU will spend most of its time at or near its top frequency, that is with 'race to idle' taken out of the equation - we were looking at PlayStation 5 games in situations where the whole frame was being used productively. The same is true for the CPU, based on examination of situations where it has high utilisation throughout the frame, we have concluded that the CPU will spend most of its time at its peak frequency."

Put simply, with race to idle out of the equation and both CPU and GPU fully used, the boost clock system should still see both components running near to or at peak frequency most of the time. Cerny also stresses that power consumption and clock speeds don't have a linear relationship. Dropping frequency by 10 per cent reduces power consumption by around 27 per cent. "In general, a 10 per cent power reduction is just a few per cent reduction in frequency," Cerny emphasises.
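Both figures are consistent with power scaling roughly with the cube of frequency, which is a common rule of thumb when voltage is lowered along with the clock. That cubic relationship is an assumption used here to check the arithmetic, not something Sony has stated.

```python
# Checking the quoted figures against an assumed cubic power/frequency law.

def power_ratio(freq_ratio: float) -> float:
    """Relative power at a given relative frequency (assumed cubic)."""
    return freq_ratio ** 3


def freq_ratio_for_power(target_power_ratio: float) -> float:
    """Relative frequency that yields a given relative power."""
    return target_power_ratio ** (1 / 3)


power_saving = 1 - power_ratio(0.90)        # frequency cut by 10 per cent
freq_cost = 1 - freq_ratio_for_power(0.90)  # power cut by 10 per cent

print(f"10% lower clock -> {power_saving:.1%} less power")
print(f"10% less power  -> {freq_cost:.1%} lower clock")
```

Under this assumption a 10 per cent frequency drop saves about 27 per cent of the power, while a 10 per cent power saving costs only around 3.5 per cent of the clock.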

It's an innovative approach, and while the engineering effort that went into it is likely significant, Mark Cerny sums it up succinctly: "One of our breakthroughs was finding a set of frequencies where the hotspot - meaning the thermal density of the CPU and the GPU - is the same. And that's what we've done. They're equivalently easy to cool or difficult to cool - whatever you want to call it."

There's likely more to discover about how boost will influence game design. Several developers speaking to Digital Foundry have stated that their current PS5 work sees them throttling back the CPU in order to ensure a sustained 2.23GHz clock on the graphics core. It makes perfect sense as most game engines right now are architected with the low performance Jaguar in mind - even a doubling of throughput (ie 60fps vs 30fps) would hardly tax PS5's Zen 2 cores. However, this doesn't sound like a boost solution, but rather performance profiles similar to what we've seen on Nintendo Switch. "Regarding locked profiles, we support those on our dev kits, it can be helpful not to have variable clocks when optimising. Released PS5 games always get boosted frequencies so that they can take advantage of the additional power," explains Cerny.

But what if developers aren't going to optimise specifically to PlayStation 5's power ceiling? I wondered whether there were 'worst case scenario' frequencies that developers could work around - an equivalent to the base clocks PC components have. "Developers don't need to optimise in any way; if necessary, the frequency will adjust to whatever actions the CPU and GPU are performing," Mark Cerny counters. "I think you're asking what happens if there is a piece of code intentionally written so that every transistor (or the maximum number of transistors possible) in the CPU and GPU flip on every cycle. That's a pretty abstract question, games aren't anywhere near that amount of power consumption. In fact, if such a piece of code were to run on existing consoles, the power consumption would be well out of the intended operating range and it's even possible that the console would go into thermal shutdown. PS5 would handle such an unrealistic piece of code more gracefully."

Right now, it's still difficult to get a grip on boost and the extent to which clocks may vary. There has also been some confusion about backwards compatibility, where Cerny's comments about running the top 100 PlayStation 4 games on PS5 with enhanced performance were misconstrued to mean that only a relatively small number of titles would run at launch. This was clarified a couple of days later (expect thousands of games to run) but the nature of backwards compatibility on PlayStation 5 is fascinating.

PlayStation 4 Pro was built to deliver higher performance than its base counterpart in order to open the door to 4K display support, but compatibility was key. A 'butterfly' GPU configuration was deployed which essentially doubled up on the graphics core, but clock speeds aside, the CPU had to remain the same - the Zen core was not an option. For PS5, extra logic is added to the RDNA 2 GPU to ensure compatibility with PS4 and PS4 Pro, but how about the CPU side of the equation?

"All of the game logic created for Jaguar CPUs works properly on Zen 2 CPUs, but the timing of execution of instructions can be substantially different," Mark Cerny tells us. "We worked to AMD to customise our particular Zen 2 cores; they have modes in which they can more closely approximate Jaguar timing. We're keeping that in our back pocket, so to speak, as we proceed with the backwards compatibility work."

The proprietary SSD - how it works and what it delivers

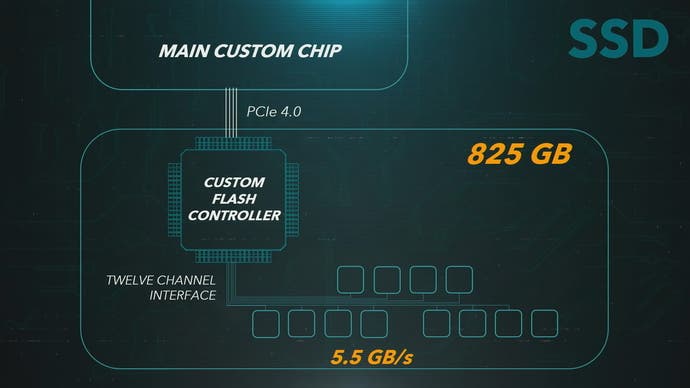

From the very first PlayStation 5 reveal in Wired, Sony has spent a lot of time evangelising its SSD - the solid-state storage solution that will be transformative not just in terms of loading times, but in how games will be able to deliver bigger, more detailed worlds and much more dynamic use of memory. With an impressive 5.5GB/s of raw bandwidth alongside hardware accelerated decoding (boosting effective bandwidth to around 8-9GB/s), PlayStation 5's SSD is clearly a point of pride for Mark Cerny and his team.

There's low level and high level access and game-makers can choose whichever flavour they want - but it's the new I/O API that allows developers to tap into the extreme speed of the new hardware. The concept of filenames and paths is gone in favour of an ID-based system which tells the system exactly where to find the data they need as quickly as possible. Developers simply need to specify the ID, the start location and end location and a few milliseconds later, the data is delivered. Two command lists are sent to the hardware - one with the list of IDs, the other centring on memory allocation and deallocation - i.e. making sure that the memory is freed up for the new data.

With latency of just a few milliseconds, data can be requested and delivered within the processing time of a single frame, or at worst for the next frame. This is in stark contrast to a hard drive, where the same process can typically take up to 250ms. What this means is that data can be handled by the console in a very different way - a more efficient way. "I'm still working on games. I was a producer on Marvel's Spider-Man, Death Stranding and The Last Guardian," says Mark Cerny. "My work was on a mixture of creative and technical issues - so I pick up a lot of insight as to how systems are working in practice."

One of the biggest issues is how long it takes to retrieve data from the hard drive and what this means for developers. "Let's say an enemy is going to yell something as it dies, which can be issued as an urgent cut-in-front-of-everybody else request, but it's still very possible that it takes 250 milliseconds to get the data back due to all of the other game and operating requests in the pipeline," Cerny explains. "That 250 milliseconds is a problem because if the enemy is going to yell something as it dies, it needs to happen pretty much instantaneously; this kind of issue is what forces a lot of data into RAM on PlayStation 4 and its generation."
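To put that latency in terms a game cares about, the short sketch below converts it into frames. The 250ms figure is Cerny's; the 3ms value is an assumed stand-in for 'a few milliseconds', and the function name is hypothetical.

```python
# How many frames go by while the game waits for requested data?

def frames_elapsed(latency_ms: float, fps: int) -> float:
    """Number of frames that pass while a request is outstanding."""
    return latency_ms * fps / 1000


HDD_LATENCY_MS = 250.0  # worst-case figure quoted by Cerny
SSD_LATENCY_MS = 3.0    # 'a few milliseconds': 3ms is an assumed stand-in

for fps in (30, 60):
    hdd = frames_elapsed(HDD_LATENCY_MS, fps)
    ssd = frames_elapsed(SSD_LATENCY_MS, fps)
    print(f"{fps}fps: hard drive {hdd:.2f} frames, SSD {ssd:.2f} frames")
```

At 30fps the hard drive request can arrive seven and a half frames late, whereas the assumed SSD figure fits comfortably inside a single frame.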

In short, to get instant access to urgent data, more of it needs to be stored in RAM on the current generation consoles - opening the door to a huge efficiency saving for next-gen. The SSD alleviates a lot of the burden simply because data can be requested as it's needed as opposed to caching a bunch of it that the console may need... but may not. There are further efficiency savings because duplication is no longer needed. Much of a hard drive's latency stems from the mechanical head having to move across the surface of the drive platter. Finding data can take as long as reading it, or longer. Therefore, the same data is often duplicated hundreds of times simply to ensure that the drive is occupied with reading data as opposed to wasting time looking for it (or "seeking" it).

"Marvel's Spider-Man is a good example of the city block strategy. There are higher LOD and lower LOD representations for about a thousand blocks. If something is used a lot it's in those bundles of data a lot," says Cerny.

Without duplication, drive performance drops through the floor - a target 50MB/s to 100MB/s of data throughput collapsed to just 8MB/s in one game example Cerny looked at. Duplication massively increases throughput, but of course, it also means a lot of wasted space on the drive. For Marvel's Spider-Man, Insomniac came up with an elegant solution, but once again, it leaned heavily on using RAM.

"Telemetry is vital in spotting issues with such a system, for example, telemetry showed that the city database jumped in size by a gigabyte overnight. It turned out the cause was 1.6MB of trash bags - that's not a particularly large asset - but the trash bags happened to be included in 600 city blocks," explains Mark Cerny. "The Insomniac rule is that any asset used more than four hundred times is resident in RAM, so the trash bags were moved there, though clearly there's a limit to how many assets can reside in RAM."

It's another example of how the SSD could prove transformative to next-gen titles. The install size of a game will be more optimal because duplication isn't needed; those trash bags only need to exist once on the SSD - not hundreds or thousands of times - and would never need to be resident in RAM. They will load with latency and transfer speeds that are a couple of orders of magnitude faster, meaning a 'just in time' approach to data delivery with less caching.
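The trash bag example is worth checking with the numbers Cerny gives. The sketch below uses only the figures quoted in the interview; the function names are hypothetical and the residency check is a literal reading of the 'more than four hundred times' rule.

```python
# The cost of duplicating one small asset across many city blocks,
# using the figures quoted in the interview.

ASSET_MB = 1.6             # size of the trash bag asset
BLOCKS_USING_ASSET = 600   # city blocks that each bundled a copy
RESIDENT_THRESHOLD = 400   # Insomniac's quoted rule of thumb


def duplicated_footprint_mb(asset_mb: float, copies: int) -> float:
    """Disk space used when every block carries its own copy."""
    return asset_mb * copies


def should_be_ram_resident(use_count: int) -> bool:
    """Literal reading of the rule: used more than 400 times -> keep in RAM."""
    return use_count > RESIDENT_THRESHOLD


on_disk = duplicated_footprint_mb(ASSET_MB, BLOCKS_USING_ASSET)
print(f"duplicated on HDD: about {round(on_disk)}MB; stored once on SSD: {ASSET_MB}MB")
print("RAM resident under the rule:", should_be_ram_resident(BLOCKS_USING_ASSET))
```

Six hundred copies of a 1.6MB asset come to roughly 960MB, which matches the gigabyte jump seen in telemetry.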

Behind the scenes, the SSD's dedicated Kraken compression block, DMA controller, coherency engines and I/O co-processors ensure that developers can easily tap into the speed of the SSD without requiring bespoke code to get the best out of the solid-state solution. A significant silicon investment in the flash controller ensures top performance: the developer simply needs to use the new API. It's a great example of a piece of technology that should deliver instant benefits, and won't require extensive developer buy-in to utilise it.

3D Audio - the power of the Tempest engine

Sony's plans for 3D audio are expansive and ambitious - unprecedented, even. Put simply, PlayStation 5 sees the platform holder pushing surround significantly beyond anything we've seen in the gaming space before, comprehensively out-speccing Dolby Atmos in the process by theoretically processing hundreds of discrete sound sources in 3D space, not just the 32 in the Atmos spec. It's also about delivering that sound without requiring bespoke audio equipment. In effect, Sony is looking to break boundaries with audio and democratise it too.

Increased precision in surround sound has been an evolutionary process from PlayStation 3 to PS4 and into PlayStation VR, which is capable of supporting around 50 3D sound sources. Looking back at an earlier interview with Sony's Garry Taylor and Simon Gumbleton, it's fascinating to see that many of the foundations on which PlayStation 5 audio is based started to come to the fore with PSVR, including early use of the Head-Related Transfer Function - the HRTF.

In general, the scale of the task in dealing with game audio is already extraordinary - not least because audio is processed at 48000Hz in blocks of 256 samples. That works out to 187.5 audio 'ticks' per second, so new audio needs to be delivered every 5.3ms. Bear that in mind when considering the weight of the data Sony's processor works through per tick.
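The tick arithmetic is straightforward to reproduce. The sketch assumes the 256 samples are the size of each processing block, which is the reading the quoted numbers imply.

```python
# Audio tick rate from the sample rate and block size quoted above.

SAMPLE_RATE_HZ = 48_000
SAMPLES_PER_TICK = 256  # assumed to be the per-tick block size

ticks_per_second = SAMPLE_RATE_HZ / SAMPLES_PER_TICK
tick_interval_ms = 1000 / ticks_per_second

print(ticks_per_second)            # 187.5
print(round(tick_interval_ms, 2))  # 5.33
```

Every sound source in the scene has to be fully processed inside that 5.3ms window, tick after tick.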

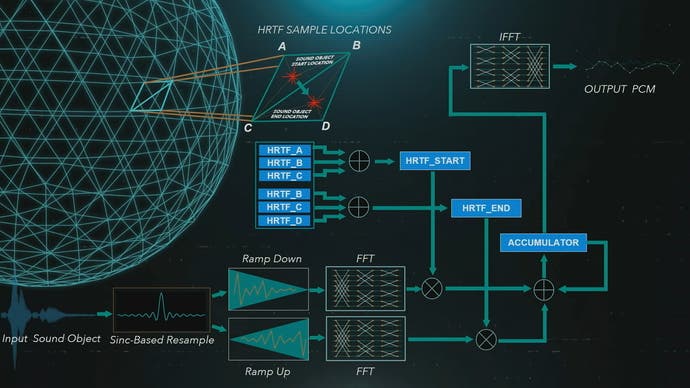

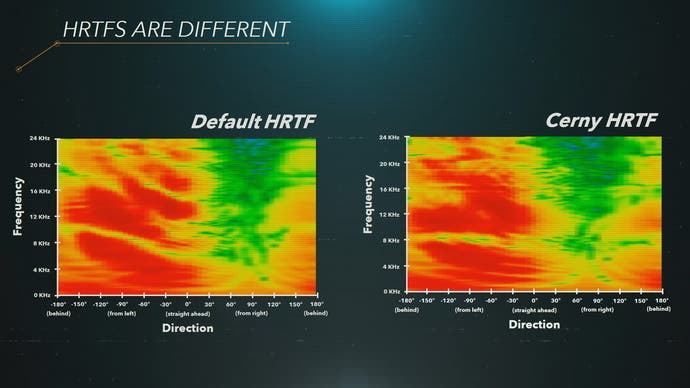

And this is where the HRTF makes its way into the discussion for PS5 audio. In his presentation, Mark Cerny showed his own HRTF, which is essentially a table that maps how audio is perceived, filtered via variables such as the size and shape of the head and the contours of the ear. Perhaps what wasn't so clear is that our ears are not identical, meaning that each positioned track actually needs to be passed through two HRTFs - one per ear.

"If the HRTF discussion is a bit brain bending, there are a few concepts regarding sound localisation that are a bit simpler to describe, namely the ILD and the ITD," explains Mark Cerny. "The ILD is the interaural level difference, which is to say the difference in the intensity of the sound reaching each ear. It varies by frequency and location; if the sound source is on my right, then my left ear will hear low frequencies less and high frequencies a whole lot less, because low frequency sounds can diffract around the head but high frequency sounds can't - they don't bend, they bounce. And so the ILD varies based on wherever the sound is coming from and the frequency of the sound, as well as the size of your head and the shape of your head. The ITD - the interaural time delay - is how long it takes for the sound to hit your right ear versus your left ear.

"Clearly, if the sound source is in front of you, the interaural time delay is zero. But if the sound source is to your right, there's a delay that is roughly the speed of sound divided by the distance between your ears. The HRTF that we use in the 3D audio algorithms encapsulates the ILD and ITD, as well as a bit more."

The HRTF essentially delivers a 3D grid with values that can be used to place an object's position according to the ILD and ITD, but it does not have the granularity to accommodate every single position. Making the process trickier still is that the human brain is capable of incredible precision and so the algorithms here need to be remarkably effective.
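For a sense of scale, the interaural time delay can be estimated with a very simple geometric model: the extra path to the far ear is the ear spacing multiplied by the sine of the source angle, divided by the speed of sound. This ignores the way sound wraps around the head, and the 0.18m ear spacing is an assumed round figure, so treat the result as a ballpark only.

```python
import math

# Simplified interaural time delay (ITD) estimate.
# Ignores diffraction around the head; ear spacing is an assumed value.

SPEED_OF_SOUND_M_S = 343.0  # in air at roughly room temperature
EAR_SPACING_M = 0.18        # assumed distance between the ears


def itd_seconds(azimuth_deg: float) -> float:
    """Delay between ears for a distant source; 0 = ahead, 90 = to the right."""
    path_difference_m = EAR_SPACING_M * math.sin(math.radians(azimuth_deg))
    return path_difference_m / SPEED_OF_SOUND_M_S


for angle in (0, 45, 90):
    print(f"{angle:>2} degrees: {itd_seconds(angle) * 1000:.2f}ms")
```

Even with the source directly to one side, the delay is only around half a millisecond, which gives some idea of the precision the brain works with and the algorithms have to match.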

"The way we know whether our algorithms aren't working well is through the use of pink noise, it's similar in concept to white noise (which I think we're all familiar with). We use a sound source that is pink noise, and move it around, if we hear the flavour of that sound source changing as it moves that means there is an inaccuracy in our algorithms," says Cerny.

Essentially, pink noise is white noise that has been filtered so that its power falls as frequency rises, giving equal energy per octave - a closer match to how the human ear perceives sound than white noise's flat spectrum. If the algorithm is inaccurate, you'll hear phasing artefacts - similar to the kind of effect you get putting a shell over your ear. This was one of the limitations of PlayStation VR's 3D audio processing, but thanks to the extra power of the PlayStation 5's Tempest engine, the algorithms deliver more precision, enabling cleaner, more realistic, more believable sound.

In truth, this barely covers the scale and scope of the mathematics carried out here. "The reason for the overwhelming HRTF processing diagram in the presentation was that I wanted to get out in front of you the complexity of what's required for accurate processing of a moving sound, and through that the rationale for why we constructed a dedicated unit for audio processing," adds Mark Cerny. "Essentially, we wanted to be able to throw an indefinite amount of power at whatever issues we faced. Or to put that differently, we didn't want the cost of a particular algorithm to be the reason for choosing that algorithm, we wanted to be able to focus simply on the quality of the resulting effect."

The Tempest engine itself is, as Cerny explained in his presentation, a revamped AMD compute unit, which runs at the GPU's frequency and delivers 64 flops per cycle. Peak performance from the engine is therefore in the region of 100 gigaflops, in the ballpark of the entire eight-core Jaguar CPU cluster used in PlayStation 4. While based on GPU architecture, utilisation is very, very different.

"GPUs process hundreds or even thousands of wavefronts; the Tempest engine supports two," explains Mark Cerny. "One wavefront is for the 3D audio and other system functionality, and one is for the game. Bandwidth-wise, the Tempest engine can use over 20GB/s, but we have to be a little careful because we don't want the audio to take a notch out of the graphics processing. If the audio processing uses too much bandwidth, that can have a deleterious effect if the graphics processing happens to want to saturate the system bandwidth at the same time."

Essentially, the GPU is based on the principle of parallelism - the idea of running many tasks (or waves) simultaneously. The Tempest engine is much more serial-like in nature, meaning that there's no need for attached memory caches. "When using the Tempest engine, we DMA in the data, we process it, and we DMA it back out again; this is exactly what happens on the SPUs on PlayStation 3," Cerny adds. "It's a very different model from what the GPU does; the GPU has caches, which are wonderful in some ways but also can result in stalling when it is waiting for the cache line to get filled. GPUs also have stalls for other reasons, there are many stages in a GPU pipeline and each stage needs to supply the next. As a result, with the GPU if you're getting 40 per cent VALU utilisation, you're doing pretty damn well. By contrast, with the Tempest engine and its asynchronous DMA model, the target is to achieve 100 per cent VALU utilisation in key pieces of code."

The Tempest engine is also compatible with Ambisonics, which is effectively a virtual speaker system which maps on to physical speakers. An enhanced feeling of presence is generated because any given sound can be rendered at one of 36 volume levels per speaker and it is likely to be represented at some level on all speakers. Discrete audio tends to 'lock' to physical speakers and may not be represented at all on some of them. Ambisonics is available on PlayStation 4 and PSVR right now, but with fewer virtual speakers, so there's already a big upgrade in precision via the Tempest engine - and it can be matched with Sony's more precise localisation too.

"We're beginning to see strategies for game audio where the type of processing depends on the particular sound source," say Cerny. "For example, a 'hero sound' (by which I mean an important sound, not literally a sound made by the player hero) will get 3D object treatment for ideal locality, while the majority of the sounds in the scenes go through Ambisonics for a higher level of control of sound level. With that kind of hybrid approach, you can theoretically get the best of both worlds. And since both of these are running through the same HRTF processing at the end of the audio pipeline, both can get that same marvelous sense of presence."

How PS5's 3D audio connects to your audio hardware

In the PlayStation 5 presentation, it was noted that rolling out 3D audio may take some time. While the core technology is in place for developers, piping out results to users who use various speaker systems is still a work in progress. At launch, users with standard headphones should get the complete experience as intended. Things aren't quite so simple for those using TV speakers, soundbars or 5.1/7.1 surround systems.

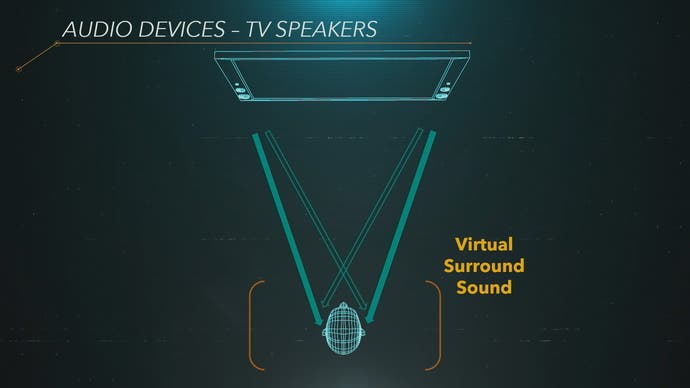

"With TV speakers and stereo speakers, the user can choose to enable or disable 'TV Virtual Surround,' so the audio pipeline needs to be able to produce audio that does not have the 3D aspects that I was talking about," Mark Cerny explains. "Virtual surround sound works in a sweet spot, and the user might not be seated in that sweet spot, or the user might be playing couch co-op (hard to fit both players in the sweet spot), etc. When virtual surround sound is enabled, HRTF based algorithms are used. When it is disabled, a simple downmix is performed - eg the location of a 3D sound object determines to what degree its sound comes from the left speaker and to what degree it comes from the right speaker."

As he mentioned in his presentation, there is a basic implementation for TV and stereo speakers up and running and the PlayStation 5 hardware team continues to optimise it.
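The 'simple downmix' Cerny describes for two speakers, where an object's position decides how much of it comes from the left and how much from the right, can be sketched with a standard constant-power pan. This is a common audio technique chosen for illustration; it is not confirmed to be what PS5 uses, and the function name and pan convention are hypothetical.

```python
import math

# Constant-power stereo panning: a common way to split one sound between
# two speakers by position. Not confirmed as Sony's method.

def stereo_gains(pan: float) -> tuple[float, float]:
    """Return (left_gain, right_gain); pan runs from -1.0 (left) to +1.0 (right)."""
    pan = max(-1.0, min(1.0, pan))
    angle = (pan + 1.0) * math.pi / 4  # maps pan to the range 0 .. pi/2
    return math.cos(angle), math.sin(angle)


for pan in (-1.0, 0.0, 0.5):
    left, right = stereo_gains(pan)
    print(f"pan {pan:+.1f}: left {left:.3f}, right {right:.3f}")
```

The squared gains always sum to one, so a sound keeps the same perceived loudness as it moves across the stereo image.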

"Once we're satisfied with our solution for these two channel systems we will turn to the issue of 5.1 and 7.1 systems," adds Cerny. "For now, though the 5.1 and 7.1 channel systems get a solution that approximates what we have now on PS4, which is to say the locations of the sound objects determine to what degree their sounds come out of each speaker. Note that 5.1 and 7.1 channel support is going to have its own special issues, in my talk I mentioned that with two channel systems the left ear can hear the right speaker and vice versa - it's even more complex with six or eight channels! Also note that if a developer is interested in using the Tempest engine power to support six or eight channels, game code is aware of the speaker setup so bespoke support is quite possible."

Where next for PlayStation 5?

There's still a lot we don't know about PlayStation 5. In his presentation, Mark Cerny mentioned that a teardown will happen at some point in the future, which is where we'll get our first look at the thermal assembly - a key component in PlayStation 5 that plays a role in defining the actual form-factor of the machine, which hopefully we will see sooner!

And at the nuts and bolts level, there are still some lingering question marks. Both Sony and AMD have confirmed that PlayStation 5 uses a custom RDNA 2-based graphics core, but the recent DirectX 12 Ultimate reveal saw AMD confirm features that Sony has not, including variable rate shading. Then there's the gulf between specs and execution - what Sony has shared in terms of specs is really impressive, but the proof of the pudding is always in the tasting. Aside from some wobbly-cam footage of Marvel's Spider-Man running on what is now an outdated dev kit, we've not seen a single rendered pixel.

And that's what I really want to see next from Sony - a slice of the PlayStation 5 experience. By this time in the run-up to launch of PS4, we'd already seen Killzone Shadow Fall running and it looked magnificent (truth is, it still does) and yes, while some game code would be welcome, I actually feel that the surrounding experience is just as important. How fast is system boot-up? Is game loading truly instant? Is there an equivalent to Series X's impressive quick resume? Do PS4 titles with unlocked frame-rates (eg inFamous Second Son, Killzone Shadow Fall) lock to 60 frames per second on PS5? The more you think about it, the more questions arise - a reminder that as deep as we've gone into the PS5 system architecture, this really is just the beginning.